July 8, 2025 • 13 min read

Shadow AI: Understanding and Managing Unauthorized AI in Organizations

CX Analyst & Thought Leader


What Is Shadow AI?

Shadow AI is the unsanctioned and unmonitored use of AI applications in the workplace. When employees and other stakeholders use unapproved AI tools without proper IT oversight, they risk data breaches, contaminated or biased data, increased security vulnerabilities, and significant regulatory compliance penalties. Together, these risks can cause reputational damage that many businesses struggle to recover from.

Shadow AI vs Shadow IT

Shadow AI is the natural evolution of Shadow IT: the unsanctioned and unmonitored deployment of hardware, software, and other IT systems on an enterprise network. Common examples of Shadow IT include personal cloud storage services, unapproved project management apps, and personal laptops used for work-related tasks.

Common Examples of Shadow AI

Let’s look at an infamous example of Shadow AI use: the 2023 Samsung trade secret leak.

When Samsung engineers uploaded meeting transcripts and confidential source code into ChatGPT, their motives were innocent enough: they wanted to use the popular GenAI chatbot to summarize meetings and debug code. They didn’t realize, however, that the data they shared could be used to train OpenAI’s models. They were also unaware that data entered into ChatGPT is stored on servers owned by OpenAI, outside Samsung’s control, and could potentially resurface in responses generated for other users.

As a result, Samsung entirely banned the use of generative AI tools on company-owned devices and networks.

GenAI chatbots like ChatGPT are far from the only apps that encourage Shadow AI use.

Today, many applications are either already AI-powered or plan to add AI features soon. This means Shadow AI is a risk whenever employees use analytics, knowledge management, contact center, marketing, productivity, or writing/editing tools.

Examples of Unintentional Shadow AI

Unintentional Shadow AI use results from a lack of clear AI governance and oversight policies, company leadership’s unfamiliarity with AI, or the widespread accessibility of public AI tools.

Employees engage in unintentional Shadow AI use when they:

- Use public GenAI tools without knowing the platform’s data retention and use policies

- Activate new AI features or upgrades within existing applications

- Upload company data to their personal AI app accounts, not their professional accounts

- Batch upload multiple business documents to AI apps without knowing some files contain sensitive data


Examples of Intentional Shadow AI

Unfortunately, intentional Shadow AI use–where users knowingly flout company AI policies or deliberately continue using AI tools despite the risks–is increasingly common. About half of employees say they’ve knowingly uploaded confidential business information to public AI tools, and 3 in 5 employees have witnessed their coworkers using AI inappropriately.[*]

Intentional Shadow AI use is often a consequence of unrealistic performance expectations, the desire or pressure to increase productivity or embrace innovation, and a lack of approved alternative AI applications.

How Widespread Is Shadow AI?

AI use at work has grown by an astounding 6,000% in the past two years. About ⅔ of US employees regularly use AI at work, with 31% using AI on a daily or weekly basis.[*] Given such widespread adoption, it’s unsurprising that Shadow AI has become a persistent problem. What is surprising is the frequency, and the intentionality, of unsanctioned AI use in the workplace.

With 90% of enterprise AI usage happening through unauthorized personal accounts, Shadow AI is far more prevalent than most businesses realize.[*]

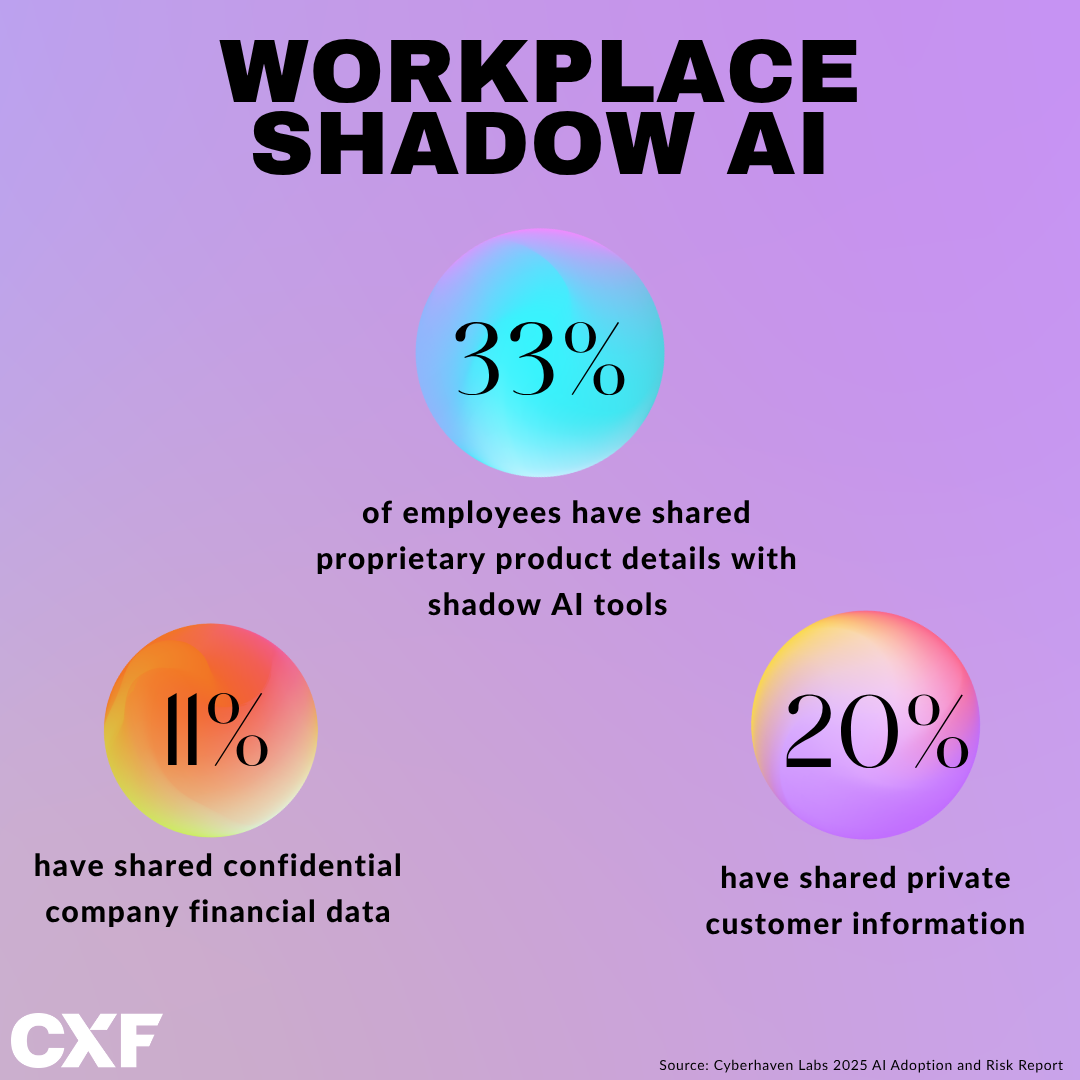

Nearly 35% of all the corporate data shared with AI apps is classified as sensitive. 33% of employees admit to sharing proprietary product details with AI tools, while 20% have shared private customer information.[*] Most alarmingly, 11% have shared confidential company financial data with AI tools.

What Causes Shadow AI Use?

There are multiple reasons for the popularity of Shadow AI, including:

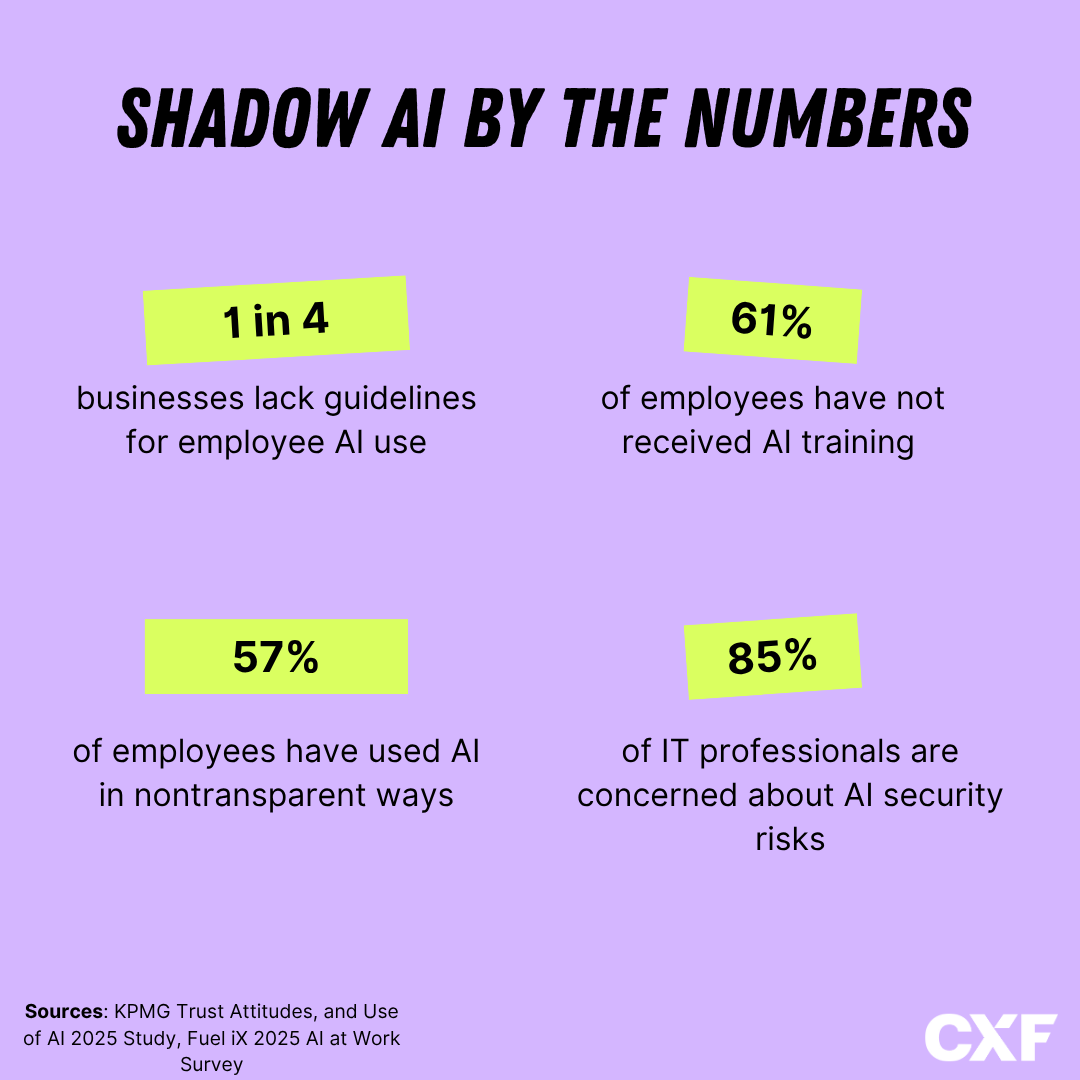

- Lack of Formal AI Governance Policies: Nearly ¼ of businesses lack any guidelines or policies regarding employee AI use–and many existing guidelines are too general or vague to be truly effective.[*] If employees don’t know the rules, they can’t be expected to follow them.

- AI Bans: Totally banning AI in the workplace makes employees more likely to use Shadow AI. 67% of employees working in AI-banned environments use Shadow AI, compared to only 33% of employees working in organizations without an active AI governance policy.[*]

- Limited Employee Training: 61% of employees report receiving no AI training at work, and almost half say their existing knowledge of AI is very limited.[*] Without proper training, employees may not even be aware of Shadow AI risks, much less understand how to mitigate them.

- Improved Workflows: AI tools help employees work faster and more efficiently. Roughly 90% of employees say AI has a positive impact on their work: 60% say AI helps them work faster, 57% say it makes their job easier, 49% say it improves their job performance, and 23% say AI makes their work more enjoyable.[*]

- Pressure To Adopt AI: Nearly half of employees worry about being left behind if they fail to use AI in the workplace.[*] Many are equally worried about being entirely replaced by AI, and feel improving their AI skill sets makes them a more valuable (and less replaceable) employee.

- Ease of Access To AI Tools: There are more easy-to-use AI apps than ever before, and most of them have free versions. Employees don’t have to look far to find a purpose-built AI tool for their needs. The result is Shadow AI use on a massive scale: 70% of employees now use free, publicly available AI tools, and 57% have used AI in non-transparent ways (like passing off AI-generated content as their own).

- Lack of Quality AI Tools: An outdated or incomplete tech stack also drives Shadow AI use. If employers don’t provide the software team members need to get the job done, employees will often fill the gaps with their own tools.

The Risks and Challenges of Shadow AI

Whatever the reason for Shadow AI use, the potential consequences are the same: financial penalties, shattered customer trust, and poor business decisions based on inaccurate data.

Security Vulnerabilities

85% of IT professionals are already concerned about the potential security risks of AI use, and those risks multiply when Shadow AI is added to the mix. Shadow AI apps operate outside of existing business security protocols, are rarely end-to-end encrypted, and have not been vetted by IT teams.

Once customer and internal business data is uploaded (logged) to an unauthorized AI app, enterprises lose control over who can access that data and how long it is retained. Many AI tools don’t just store logged prompts; they may also use that data to improve their models. From there, data breaches can happen in several ways:

- Cross-Prompt Exposure: Sensitive data is indexed and reused in prompts across different systems, users, and AI applications

- AI Plug-Ins: AI plug-ins or browser extensions (especially autocomplete and writing/editing tools) expose sensitive data via “suggested” outputs and share it with other LLMs

- Third-Party APIs: Many AI apps share user data with external servers, making it virtually impossible to track the extent of the data breach

What’s at risk of being exposed? Trade secrets, source code and algorithms, customer PII, pricing models, contracts…you name it. Worse still, breaches involving Shadow AI take 20% longer to contain.[*]

Regulatory and Compliance Violations

Once those data leaks happen, businesses won’t just lose customers–depending on the industry, they could face heavy penalties, fines, legal trouble, and even closure.

Violating regulations like GDPR, HIPAA, and SEC rules is costly: Shadow AI-related data breaches cost businesses an average of $5.27 million.[*]

Misinformation

Over half of employees say they’ve made mistakes at work due to AI use, and 46% of IT leaders have had to correct inaccurate AI-generated results. This problem is exacerbated by the fact that 2 out of 3 employees admit they don’t bother to verify or review AI outputs.[*]

While AI can certainly do a lot, it’s far from foolproof. Popular GenAI tools are trained on massive amounts of data, much of it low-quality or unverifiable. Hallucinations, inaccuracies, and insidious biases are common.

Data bias, for one, opens businesses up to major legal and reputational trouble. As of this writing, several federal lawsuits have alleged AI-based discrimination, such as automatically filtering out job candidates based on race, disability, gender, or educational background.

Even if discrimination lawsuits are avoided, too many businesses are making crucial and costly decisions based on out-of-context, inaccurate data.

Employee Deskilling and Low Employee Effort

72% of employees admit their use of AI has caused them to make less of an effort at work, and nearly half say they use AI in lieu of collaborating with their teammates or supervisors.[*]

Excessive AI use, whether via Shadow AI tools or company-approved ones, can lead to employee skill degradation, a loss of critical thinking skills, and decreased employee engagement.

Over time, AI overuse can produce a workforce that becomes progressively less capable of independent thought and creative problem-solving.

Turning a blind eye to Shadow AI use may increase productivity, but it also risks creating a disengaged, de-skilled workforce that’s almost entirely dependent on AI to function.

Managing and Mitigating Shadow AI Risks

Given the severe consequences of Shadow AI use, many companies implement reactive AI bans that do little more than stifle innovation and encourage continued Shadow AI use.

Effective Shadow AI risk management strategies aren’t about completely eliminating AI. They’re about proactive Shadow AI mitigation, transparency surrounding AI use, and clear, collaborative AI governance policies.

Managing Shadow AI use is a multi-step process:

Step 1: Establish Clear AI Use Policies+Governance Frameworks

AI governance proactively ensures employees use AI ethically, securely, and responsibly by creating multi-layered, flexible policies surrounding acceptable AI use.

AI governance frameworks and acceptable use policies should:

- Classify AI tools as prohibited, limited-use, or approved

- Outline acceptable use cases for approved AI tools and AI monitoring strategies

- Create rules regarding the types of data that can be provided to AI apps

- Ensure compliance with industry regulations that “AI workarounds” put at risk, like GDPR, HIPAA, and PCI DSS

- Establish role-based access controls for AI apps and additional security guardrails

- Implement an AI incident response plan
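The tiered classification and data rules above can be encoded so that internal tools or proxies check them automatically. The Python sketch below is a minimal, hypothetical example: the tool names, the four data-sensitivity levels, and the `check_request` function are illustrative assumptions, not part of any specific governance product.

```python
from dataclasses import dataclass, field
from enum import Enum


class ToolStatus(Enum):
    """Policy tiers from the framework: prohibited, limited-use, approved."""
    PROHIBITED = "prohibited"
    LIMITED_USE = "limited-use"
    APPROVED = "approved"


class DataClass(Enum):
    """Hypothetical data-sensitivity levels, lowest to highest."""
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3
    RESTRICTED = 4  # e.g. customer PII, financial data, source code


@dataclass
class ToolPolicy:
    status: ToolStatus
    max_data_class: DataClass  # most sensitive data the tool may receive
    allowed_roles: set[str] = field(default_factory=set)  # empty set = any role


# Hypothetical registry; tool names are placeholders.
POLICY: dict[str, ToolPolicy] = {
    "internal-assistant": ToolPolicy(ToolStatus.APPROVED, DataClass.CONFIDENTIAL),
    "public-chatbot": ToolPolicy(ToolStatus.LIMITED_USE, DataClass.PUBLIC),
    "code-helper": ToolPolicy(ToolStatus.LIMITED_USE, DataClass.INTERNAL, {"engineering"}),
    "unvetted-extension": ToolPolicy(ToolStatus.PROHIBITED, DataClass.PUBLIC),
}


def check_request(tool: str, role: str, data_class: DataClass) -> tuple[bool, str]:
    """Return (allowed, reason) for a proposed use of an AI tool."""
    policy = POLICY.get(tool)
    if policy is None:
        # Tools nobody has reviewed are treated as Shadow AI by default.
        return False, "tool not reviewed"
    if policy.status is ToolStatus.PROHIBITED:
        return False, "tool prohibited"
    if policy.allowed_roles and role not in policy.allowed_roles:
        return False, "role not permitted"
    if data_class.value > policy.max_data_class.value:
        return False, "data too sensitive for this tool"
    return True, "allowed"


print(check_request("public-chatbot", "marketing", DataClass.INTERNAL))
# (False, 'data too sensitive for this tool')
```

Unknown tools default to "not allowed" here, which mirrors the idea that anything not yet reviewed counts as Shadow AI. Whether to block or merely warn on unreviewed tools is a policy choice each organization would need to make.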

Step 2: Choose+Integrate Approved AI Tools

Effective risk management means leadership must select which AI tools to approve for internal use. Above all, accepted AI tools must be as good as or better than the public Shadow AI apps employees are currently using. Otherwise, there’s no real incentive for employees to adopt approved options.

Prioritize customizable, user-friendly AI apps that easily integrate into existing workflows and require minimal training. Also focus on tools with robust privacy and security measures, industry-specific LLMs, and reliable customer support.

Successful adoption of approved AI tools requires rigorous employee training and a clear demonstration of value. For easy adoption, provide an internal, employee-facing “App Store” that lists (and includes download links for) approved AI tools. To encourage employee buy-in, leadership must champion early adopters, transparently address employee concerns about AI, and measure internal AI adoption.

Step 3: Provide Ongoing AI Upskilling+Training

Employees who have received AI training are almost twice as likely to trust and accept AI technologies as those who have never been trained.[*] While technical training on how to use approved AI tools is essential, hands-on training alone isn’t enough.

Currently, fewer than half of employees receive AI safety training.[*] AI safety training should cover the risks and consequences of Shadow AI use, teach employees how to spot AI vulnerabilities, and actively encourage employee oversight of AI.

Step 4: Monitor AI Use+Add Technical Controls

Effective AI technical controls help businesses detect Shadow AI use, enforce acceptable use policies, and mitigate the risks of AI. Leverage a combination of AI monitoring and tracking tools to enforce compliance and security standards, and to discover when employees are using Shadow AI.

Data Security Posture Management (DSPM) solutions identify, classify, and protect sensitive data across multiple cloud services. AI monitoring platforms determine where AI is being used in an organization, and which employees are using it. GenAI firewalls control GenAI traffic, monitoring and blocking sensitive content so it can’t be submitted to, or later retrieved from, AI tools. AI-specific data loss prevention (DLP) tools filter sensitive information to prevent data leaks, and can even block copy/paste actions in real time.
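As a rough illustration of the DLP idea, the Python sketch below masks a few common sensitive patterns (email addresses, US Social Security numbers, and Luhn-valid card numbers) before a prompt is sent to an external AI tool. The patterns, labels, and the `redact_prompt` function are hypothetical; commercial DLP tools rely on much broader detection than a handful of regular expressions.

```python
import re
from typing import Callable

# Illustrative patterns only; real DLP products use far richer detection.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by spaces or hyphens
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def luhn_valid(number: str) -> bool:
    """Luhn checksum, used to reduce false positives on card-like digit runs."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_prompt(prompt: str) -> tuple[str, list[str]]:
    """Mask sensitive values before a prompt is sent to an external AI tool.

    Returns the redacted prompt and the labels of everything that was masked.
    """
    findings: list[str] = []

    def make_sub(label: str) -> Callable[[re.Match], str]:
        def sub(match: re.Match) -> str:
            if label == "CARD" and not luhn_valid(match.group()):
                return match.group()  # not a plausible card number; keep it
            findings.append(label)
            return f"[{label}]"
        return sub

    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(make_sub(label), prompt)
    return prompt, findings


text = "Email jane.doe@example.com, SSN 123-45-6789, card 4111 1111 1111 1111."
print(redact_prompt(text))
# ('Email [EMAIL], SSN [US_SSN], card [CARD].', ['EMAIL', 'US_SSN', 'CARD'])
```

The Luhn check is what keeps an order number like `1234 5678 9012 3456` from being masked as a card. Redacting rather than blocking lets employees keep working, while the returned findings could feed the monitoring and incident response processes described in this guide.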

Additional technical controls can include:

- Network segmentation/micro-segmentation for AI applications, AI training, AI APIs, or data environments

- Zero-trust policies for all AI systems

- Network scanners that identify unusual data sharing with AI apps, unauthorized accounts/access, or real-time suspicious activity

- Security measures like end-to-end encryption, data masking, multi-factor authentication, API rate limiting, and role-based access control (RBAC)
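Network scanning for unusual data sharing can start as simply as summarizing outbound proxy logs. The sketch below assumes a hypothetical log record containing a user, a destination host, and bytes uploaded, plus organization-maintained lists of known and approved AI domains. The domain lists and the 5 MB alert threshold are illustrative placeholders, not recommendations.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical inventory; real deployments would maintain these lists centrally.
KNOWN_AI_DOMAINS = {"chatgpt.com", "claude.ai", "gemini.google.com", "ai.internal.example.com"}
APPROVED_AI_DOMAINS = {"ai.internal.example.com"}
HIGH_ALERT_BYTES = 5_000_000  # assumed threshold for "unusual" upload volume


@dataclass
class ProxyRecord:
    """One outbound request from a (hypothetical) web proxy log."""
    user: str
    host: str
    bytes_out: int


def find_shadow_ai(records: list[ProxyRecord]) -> dict[str, list[str]]:
    """Total uploads to unapproved AI domains per user and flag large ones."""
    uploaded: defaultdict[tuple[str, str], int] = defaultdict(int)
    for r in records:
        if r.host in KNOWN_AI_DOMAINS and r.host not in APPROVED_AI_DOMAINS:
            uploaded[(r.user, r.host)] += r.bytes_out

    alerts: defaultdict[str, list[str]] = defaultdict(list)
    for (user, host), total in sorted(uploaded.items()):
        level = "HIGH" if total >= HIGH_ALERT_BYTES else "INFO"
        alerts[user].append(f"{level}: {total} bytes to unapproved AI app {host}")
    return dict(alerts)


logs = [
    ProxyRecord("alice", "chatgpt.com", 6_000_000),
    ProxyRecord("bob", "claude.ai", 1_200),
    ProxyRecord("carol", "ai.internal.example.com", 9_000_000),  # approved tool
    ProxyRecord("bob", "intranet.example.com", 50_000),  # not an AI app
]
for user, messages in find_shadow_ai(logs).items():
    print(user, messages)
# alice ['HIGH: 6000000 bytes to unapproved AI app chatgpt.com']
# bob ['INFO: 1200 bytes to unapproved AI app claude.ai']
```

Low-volume hits are still reported as `INFO` rather than ignored, since even small prompts can contain sensitive data. In practice, these alerts would be a starting point for a conversation about approved alternatives, not an automatic penalty.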

Step 5: Create an AI Incident Response Plan

Even the best governance policies can’t prevent every AI-related security issue.

To lessen their effect, have a clear AI incident response plan in place before anything happens. AI incident response plans should include escalation strategies, accountability chains, customer notification policies, and potential remedial action.

Best Practices For Shadow AI Management

These Shadow AI management best practices help businesses create sustainable, forward-looking AI governance policies that balance security and productivity:

- Lead By Example: Leadership should demonstrate that AI governance rules apply to everyone by using only approved AI tools, and should highlight their own positive experiences with those tools to build employee confidence.

- Continuously Update AI Policies: AI governance policies, approved AI applications, and employee training programs should be updated regularly so they stay relevant and accurate.

- Support GRC Teams: Governance, Risk, and Compliance (GRC) teams require specialized tools to effectively manage AI risks. These tools include DSPM solutions, GenAI firewalls, and AI monitoring platforms that identify unauthorized AI usage.

- Prioritize Collaboration Over Restriction: Instead of issuing blanket AI bans, collaborate with employees to understand what tools they need, which ones they prefer, and specific features to look for.

- Balance Turnaround Time with Risk Mitigation: Employees will bypass lengthy AI approval processes. Keep evaluation criteria concise and set realistic timelines for approval requests (2-3 days).

From Shadow to Strategy: Transparency In Workplace AI

The question isn’t whether your employees are using Shadow AI; it’s when (and how) you’ll find out.

If left unchecked, Shadow AI causes costly compliance violations, security vulnerabilities, data inaccuracies, employee deskilling, and untold reputational damage.

Leadership must first directly address the root causes of Shadow AI use, like a lack of formal AI governance policies and insufficient employee training related to AI. They must also carefully select which AI tools to approve for employee use, conduct regular audits of AI deployments, and establish security and compliance guardrails.

Effective AI governance policies don’t restrict AI innovation. Instead, they help employees channel it safely and strategically.