June 5, 2025 • 16 min read

What Is Agentic AI & How It's Transforming CX Operations

CEO & Founder

Agentic AI is the next operating model for customer experience. Unlike generative AI, agentic AI doesn’t wait to be prompted. It observes, plans, acts, and adapts. Gartner predicts that by 2028, one in three enterprise applications will embed agentic capabilities. If your CX strategy or product roadmap doesn’t account for this, you’re already behind.

What Is Agentic AI?

Agentic AI is artificial intelligence that can set and pursue goals autonomously. Unlike traditional systems that operate only when prompted, agentic AI takes initiative. It plans, acts, and learns without requiring step-by-step instructions. The groundwork is already in place: by 2026, more than 80% of enterprises are projected to have used generative AI APIs and models or deployed GenAI-enabled applications in production environments, up from less than 5% in 2023.[*]

Agentic AI systems observe their environment, make decisions, execute tasks, and adapt based on feedback, all in pursuit of defined objectives. Such a system functions more like a digital employee than a tool. It adapts to change and integrates with other systems to complete complex workflows with minimal oversight.

What Makes It Different?

Agentic AI marks a fundamental shift from traditional, reactive AI models. Legacy systems like spam filters or basic chatbots are built for narrow, repetitive tasks. They rely on explicit prompts, do not retain memory, and lack strategic or adaptive behavior. AI agents cover the same repetitive ground and go further: studies show that they can handle repetitive tasks with accuracy and speed, reducing human error and enabling employees to focus on higher-value work.[*]

Agentic AI takes a proactive, context-aware approach in that it:

- Recognizes what needs to be done based on real-time context

- Decides how to reach goals through self-directed planning

- Connects across systems, tools, and data to orchestrate workflows

- Learns from outcomes and continuously improves over time

How Does Agentic AI Work?

Agentic AI combines perception, memory, reasoning, and action into a continuous feedback loop. Unlike traditional AI, which acts only when prompted, an agentic system sees what needs to be done and figures out how to do it.

Here's how the process typically unfolds, step by step:

1. Goal Ingestion

The AI agent begins with a clear objective. This can come from a user input, system event, or predefined trigger.

Examples:

- “Summarize today’s customer service tickets.”

- “Reduce energy usage in this facility by 10 percent.”

The goal may be specific (a task) or general (an outcome). What makes it agentic is that the system understands the goal and takes initiative to pursue it.
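As a rough sketch of this step, a goal arriving from any of these sources could be normalized into one structure before the agent acts on it. The `Goal` class, its field names, and the `ingest_goal` helper are hypothetical and do not come from any particular agent framework.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

VALID_SOURCES = {"user_input", "system_event", "trigger"}
VALID_KINDS = {"task", "outcome"}

# Hypothetical structure, used here only for illustration.
@dataclass
class Goal:
    description: str   # what the agent should achieve
    source: str        # where the goal came from
    kind: str          # "task" (specific) or "outcome" (general)
    received_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

def ingest_goal(description: str, source: str, kind: str = "task") -> Goal:
    """Validate an incoming goal and wrap it in a Goal record."""
    if source not in VALID_SOURCES:
        raise ValueError(f"unknown goal source: {source}")
    if kind not in VALID_KINDS:
        raise ValueError(f"unknown goal kind: {kind}")
    return Goal(description=description.strip(), source=source, kind=kind)

ticket_goal = ingest_goal("Summarize today's customer service tickets.", "user_input")
energy_goal = ingest_goal(
    "Reduce energy usage in this facility by 10 percent.", "trigger", kind="outcome"
)
```

Recording the source and kind up front gives later steps a consistent way to decide how much planning a goal needs.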

2. Context Gathering

Once a goal is set, the AI agent collects the information it needs to act. This may involve querying databases, APIs, files, or past memory.

Sources may include internal systems (CRM, ERP, calendars), public data (web search, news feeds) and historical logs (previous actions, customer interactions). The AI agent uses this context to understand the environment and constraints.
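One way to picture context gathering is a loop over pluggable sources that records what could not be fetched instead of aborting. The fetch functions below are stand-ins with made-up return values; a real agent would call a CRM API, a search service, or a log store in their place.

```python
from typing import Any, Callable, Dict

# Stand-in sources with invented data, for illustration only.
def fetch_crm(goal: str) -> Dict[str, Any]:
    return {"open_tickets": 42}

def fetch_history(goal: str) -> Dict[str, Any]:
    return {"last_summary_sent": "2025-06-04"}

def fetch_news(goal: str) -> Dict[str, Any]:
    raise ConnectionError("source offline")

Source = Callable[[str], Dict[str, Any]]

def gather_context(goal: str, sources: Dict[str, Source]) -> Dict[str, Any]:
    """Query every source and note the ones that failed."""
    context: Dict[str, Any] = {"goal": goal, "data": {}, "missing": []}
    for name, fetch in sources.items():
        try:
            context["data"][name] = fetch(goal)
        except Exception as exc:
            context["missing"].append((name, str(exc)))
    return context

context = gather_context(
    "Summarize today's customer service tickets.",
    {"crm": fetch_crm, "history": fetch_history, "news": fetch_news},
)
```

Keeping a `missing` list lets the planning step know which constraints it is working without.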

3. Planning and Task Decomposition

The AI agent breaks the high-level goal into sub-tasks. This is where it applies reasoning to determine the next best steps.

The planning step may include:

- Identifying steps required to complete the goal

- Prioritizing tasks based on dependencies and importance

- Selecting tools, APIs, or functions needed to perform each task

The AI agent can replan if a step fails, if new information becomes available, or if it detects a better path forward.
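Dependency-aware ordering and replanning can be sketched with a topological sort. The sub-task names and the `replan` helper are hypothetical; `graphlib.TopologicalSorter` is part of the Python standard library (3.9+). Real agents typically generate the plan with a language model, which this sketch does not attempt.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Illustrative plan: each sub-task maps to the sub-tasks it depends on.
plan = {
    "fetch_tickets": set(),
    "group_by_topic": {"fetch_tickets"},
    "draft_summary": {"group_by_topic"},
    "send_summary": {"draft_summary"},
}

def order_tasks(dependencies):
    """Return sub-tasks in an order that respects their dependencies."""
    return list(TopologicalSorter(dependencies).static_order())

def replan(dependencies, failed_task, fallback_task):
    """Swap a failed step for a fallback that keeps the same dependencies."""
    updated = {}
    for task, deps in dependencies.items():
        name = fallback_task if task == failed_task else task
        updated[name] = {fallback_task if d == failed_task else d for d in deps}
    return updated

steps = order_tasks(plan)
new_steps = order_tasks(replan(plan, "send_summary", "post_summary_to_chat"))
```

Here the agent swaps only the failed step and keeps the rest of the plan intact.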

4. Execution

The AI agent begins acting on the plan. It performs tasks autonomously, often across systems or tools.

This may involve:

- Drafting and sending emails

- Updating a database or CRM

- Triggering a process in a workflow platform

- Interacting with APIs or internal software

Each action is taken with the goal in mind. The AI agent often logs actions, checks for errors, and moves to the next step without needing human input.
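A minimal sketch of the execution loop follows, assuming a plan expressed as (tool name, arguments) pairs. The tools are hypothetical placeholders for email, CRM, or workflow calls.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent")

# Hypothetical tools standing in for real integrations.
def send_email(to: str, body: str) -> str:
    return f"email queued for {to}"

def update_crm(record_id: str, status: str) -> str:
    return f"record {record_id} set to {status}"

TOOLS = {"send_email": send_email, "update_crm": update_crm}

def execute(plan):
    """Run each step in order, logging results and stopping on the first error."""
    results = []
    for tool_name, kwargs in plan:
        tool = TOOLS.get(tool_name)
        if tool is None:
            log.error("unknown tool %s; stopping", tool_name)
            results.append((tool_name, "error"))
            break
        try:
            output = tool(**kwargs)
            log.info("%s -> %s", tool_name, output)
            results.append((tool_name, "ok"))
        except Exception:
            log.exception("%s failed", tool_name)
            results.append((tool_name, "error"))
            break
    return results

results = execute([
    ("update_crm", {"record_id": "A-17", "status": "resolved"}),
    ("send_email", {"to": "customer@example.com", "body": "Your ticket is resolved."}),
])
```

Stopping on the first error is one design choice among several; it hands control back to whatever monitoring logic decides how to recover.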

5. Monitoring and Adaptation

As the AI agent executes tasks, it monitors progress and adapts as needed. This feedback loop is what puts the “agent” in agentic AI.

It can detect when a step has failed or stalled, then adjust the plan or sequence in real time. And when it can’t get the job done, it will escalate to a human. For example, if a pricing negotiation with a supplier isn’t yielding results, the AI agent may try a new strategy or bring in a manager.
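The retry, switch-strategy, and escalate pattern described here can be sketched as follows. The strategy functions are invented stand-ins for the supplier negotiation example, and the attempt limit is an arbitrary assumption.

```python
def run_with_adaptation(strategies, max_attempts_each=2):
    """Try each strategy in turn; escalate to a human if all of them fail.

    `strategies` is a list of (name, callable) pairs. Each callable returns
    True on success and False on failure; an exception counts as a failure.
    """
    history = []
    for name, attempt in strategies:
        for n in range(1, max_attempts_each + 1):
            try:
                succeeded = bool(attempt())
            except Exception:
                succeeded = False
            history.append((name, n, succeeded))
            if succeeded:
                return {"status": "done", "strategy": name, "history": history}
    return {"status": "escalated_to_human", "strategy": None, "history": history}

# Illustrative stand-ins: the supplier declines one offer and accepts another.
def offer_volume_discount():
    return False

def offer_longer_contract():
    return True

outcome = run_with_adaptation([
    ("volume_discount", offer_volume_discount),
    ("longer_contract", offer_longer_contract),
])
```

The returned `history` doubles as a record a manager can review if the task is escalated.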

6. Memory and State Tracking

Throughout the process, the AI agent retains memory. It stores results, progress, and observations for future reference.

Memory allows it to avoid repeating steps and personalize responses based on prior interactions. It can even resume interrupted tasks after a system reboot or handoff. This statefulness enables continuity and makes agentic systems long-term collaborators, not one-off assistants.
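Resuming after an interruption comes down to checkpointing progress somewhere durable. The sketch below uses a local JSON file for simplicity; the `AgentState` class and step names are hypothetical, and a production system would more likely use a database or the vector store mentioned elsewhere in this article.

```python
import json
import os
import tempfile

class AgentState:
    """Persist completed steps to a JSON file so work can resume later."""

    def __init__(self, path):
        self.path = path
        self.completed = []
        if os.path.exists(path):
            with open(path, "r", encoding="utf-8") as fh:
                self.completed = json.load(fh)["completed"]

    def mark_done(self, step):
        self.completed.append(step)
        with open(self.path, "w", encoding="utf-8") as fh:
            json.dump({"completed": self.completed}, fh)

    def remaining(self, plan):
        return [step for step in plan if step not in self.completed]

plan = ["fetch_tickets", "draft_summary", "send_summary"]
state_path = os.path.join(tempfile.mkdtemp(), "agent_state.json")

first_run = AgentState(state_path)
first_run.mark_done("fetch_tickets")   # the process is interrupted after this

resumed = AgentState(state_path)       # a fresh instance reloads the checkpoint
todo = resumed.remaining(plan)
```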

7. Outcome Reporting and Closure

Once the goal is achieved (or a failure condition is reached), the AI agent logs results, communicates outcomes, and may suggest next steps.

Final output may include:

- A summary report of actions taken

- Notifications to stakeholders

- Triggering follow-up tasks or agents

The AI agent can also log insights into a central knowledge base for future use.
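A closing report can be assembled from the per-step results. The `build_report` function and its output fields are assumptions made for illustration, as is the example address.

```python
def build_report(goal, results, stakeholders):
    """Summarize step results, list who to notify, and propose follow-ups."""
    failed = [step for step, status in results if status != "ok"]
    status = "failed" if failed else "achieved"
    lines = [f"Goal: {goal}", f"Status: {status}"]
    lines += [f"- {step}: {step_status}" for step, step_status in results]
    return {
        "status": status,
        "summary": "\n".join(lines),
        "notify": list(stakeholders),
        "follow_ups": [f"retry {step}" for step in failed],
    }

report = build_report(
    "Summarize today's customer service tickets.",
    [("fetch_tickets", "ok"), ("draft_summary", "ok"), ("send_summary", "error")],
    ["support-lead@example.com"],
)
print(report["summary"])
```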

Agentic AI vs. Reactive and Generative AI

To understand agentic AI, we can compare it with more familiar models used in organizations today: reactive AI and generative AI.

Reactive AI powers systems like spam filters and basic chatbots. These tools operate in a stateless, input-output loop. They only respond when prompted and do not retain memory or context.

Generative AI, such as large language models, expands this by producing creative and contextual responses. However, it still depends on human prompts and does not take initiative on its own.

Agentic AI is fundamentally different. It can set goals, retain long-term memory, and take action independently. It does not just respond to instructions but decides what needs to be done and how to do it.

Comparison Table: Reactive vs. Generative vs. Agentic AI

| Capability | Reactive AI | Generative AI | Agentic AI |
|---|---|---|---|
| Initiates Actions | No | No | Yes |
| Maintains Memory/State | No | Limited (within prompt) | Yes (persistent memory) |
| Responds to Prompts | Always required | Always required | Not always needed |
| Sets or Pursues Goals | No | No | Yes |
| Multi-step Reasoning | No | Some (within a prompt) | Yes (plans, executes, adapts) |
| Workflow Automation | Very limited | Partial | End-to-end, autonomous workflows |
| Examples | Spam filter, chatbot | LLM summary, image generation | Autonomous assistant, task agents |

Moving from Output to Outcome

- Reactive AI is limited to one-step tasks. It produces output based on immediate input and does not retain information from previous interactions. Each task must be initiated by a human or external system.

- Generative AI improves on this by creating more flexible and human-like responses. However, it still works on demand. It cannot act on its own or remember past tasks.

- Agentic AI brings a step-change in capability. It maintains memory, responds to changing context, and takes initiative. It can pursue defined goals over time, without needing to be re-prompted at every stage. This means it can manage sequences of tasks, adjust based on results, and operate independently across systems.

How Each Would Summarize a Document

- A reactive AI summarizes a document when asked.

- A generative AI produces a well-written summary when prompted.

- An agentic AI detects the new document, summarizes it, sends it to the relevant team, and schedules a follow-up meeting.

Why This Matters for Business

The difference between reactive and agentic AI is not just technical; it is also operational. Reactive systems require constant input, but agentic systems drive outcomes with minimal supervision. This unlocks new levels of productivity by letting AI manage entire workflows instead of isolated tasks.

Rather than asking, "Can you do this task?" the shift is toward stating, "Here is the goal." The AI agent figures out the steps and follows through.

The Core Traits of Agentic AI

Agentic AI systems share four defining traits. These are what separate autonomous agents from reactive models or scripted automation.

- Autonomy: the ability to make decisions and take actions without constant human guidance

- Goal Pursuit: an internal drive to achieve specific objectives, adjusting behavior to stay on course toward the goal

- Memory (Statefulness): the capacity to retain information about past events or the environment and use it in future decisions (in other words, a sense of state or context over time)

- Reasoning and Adaptability: sophisticated decision-making processes, including planning, problem-solving, and learning from experience to handle new situations

Autonomy

Autonomy allows an AI agent to act based on context rather than waiting for step-by-step instructions. It observes, evaluates, and takes initiative within defined boundaries.

This enables the agent to manage entire processes without being told what to do at each step. In business, this could mean reacting to market changes, optimizing logistics, or triggering operational workflows without human input.

Goal Pursuit

Unlike reactive systems that execute tasks in isolation, agentic AI works toward defined outcomes. It can accept a goal, plan how to achieve it, take action, and adjust if conditions change.

Instead of relying on hard-coded steps, the agent continuously evaluates whether it is moving closer to its objective and modifies its approach accordingly. This allows businesses to delegate intent rather than instruction: you set the outcome, and the agent figures out the steps. In one survey, 73% of respondents agreed that how they use AI agents will give them a significant competitive advantage in the coming 12 months.[*]

Memory and Statefulness

Most traditional AI systems lack continuity. They forget everything between sessions and require full context every time.

Agentic systems retain short-term and long-term memory. They can track task progress, user preferences, and relevant events over time. This makes agents more reliable and efficient. They reduce redundancy, maintain context, and act as persistent collaborators across workflows and timeframes.

Reasoning and Adaptability

Agentic AI brings more than task automation. It thinks through problems, evaluates options, and adapts when circumstances shift.

Whether responding to a delay, managing conflicting goals, or recalculating a path forward, the agent uses reasoning to maintain progress. It does not break when the unexpected happens.

This kind of flexible intelligence is what enables agents to function effectively in dynamic environments, from project management to customer engagement. A study showed that AI agents with self-evaluation capabilities enhance reliability and reduce supervision requirements, which helps address the high failure rate of AI projects (reported at over 80%).[*]

Examples of Agentic AI in the Real World

Agentic AI is no longer theoretical. It is showing up in real applications across open-source experiments, enterprise software, and physical robotics. These systems are beginning to think, act, and adapt on their own in meaningful ways. Below are the most active areas where agentic traits are being used today.

Autonomous Software Agents

Autonomous agents are digital programs that can set goals, manage tasks, and act without waiting for human prompts at each step. These systems are the foundation of agentic AI in software.

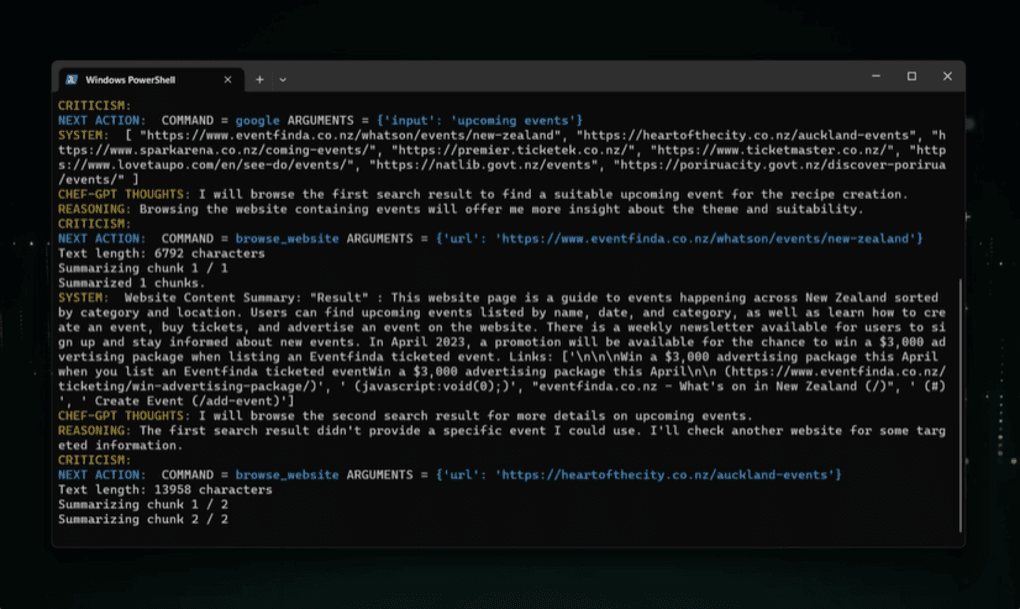

AutoGPT

AutoGPT is an open-source agent built on GPT-4 that can take a high-level goal and break it into actionable steps. It uses web browsing, memory storage, and plugin tools to complete tasks sequentially. For example, if tasked with improving SEO, it might research keywords, generate content, and adjust site structure on its own.

BabyAGI

BabyAGI is a lightweight agent framework that uses an LLM and task list to work toward objectives. It continuously generates new tasks based on previous results and stores memory using vector databases like Chroma. While more limited than AutoGPT, it illustrates the feedback loop structure that defines agentic AI.

Devin

Devin, developed by Cognition Labs, is the first AI agent publicly demonstrated to operate as a full-stack software engineer. It can write code, debug, test, and deploy software with minimal guidance. This shows how agentic AI is moving into high-skill domains like software development.

These tools are experimental, and their reliability is still evolving. But they confirm that chaining memory, planning, and action can give AI the ability to operate independently across multi-step workflows.

Enterprise Co-Pilots

Enterprise vendors are embedding agentic features into business software, creating assistants that do more than autocomplete: they anticipate needs, connect tools, and act on behalf of users.

Microsoft 365 Copilot

Microsoft’s Copilot has evolved from a writing aid into a proactive agent. It can summarize emails, coordinate meetings, suggest next actions, and connect Outlook, Teams, and SharePoint workflows. According to Microsoft’s own findings, Copilot users were 29% faster at searching, summarizing, and writing tasks.[*] Microsoft is also rolling out agent APIs that let developers build custom agents within the Microsoft ecosystem.

Salesforce Einstein GPT and Agentforce

Salesforce is integrating agentic capabilities through Einstein GPT and the Agentforce platform. These agents can handle customer service flows, generate follow-ups, escalate issues, and update CRM records autonomously. The platform allows businesses to create agents customized to their processes and data.

Google Duet AI and IBM Watson Orchestrate

Google and IBM are embedding similar agents into Workspace and business automation tools. These systems not only assist with drafting and planning but can also trigger real actions such as booking meetings or updating systems based on context.

Why Agentic AI Matters for Enterprise Strategy

Agentic AI introduces a new operating model for the enterprise. These AI systems go beyond responding to prompts. They can pursue goals, adapt to changing inputs, and carry out multi-step tasks without manual supervision. For business leaders, this unlocks new levels of productivity, flexibility, and scale.

Here’s how agentic AI impacts enterprise strategy across five key areas.

1. Workflow Automation: From Tasks to Outcomes

Traditional automation handles tasks. Agentic AI automates outcomes.

Instead of requiring handoffs between people or departments, an agent can own an entire process from start to finish.

Use cases:

- Employee onboarding: An agent creates documentation, provisions IT accounts, schedules orientation, and confirms task completion across HR, IT, and facilities.

- Expense reporting: The agent gathers receipts, verifies policy compliance, submits reports, and follows up on approvals.

- Sales enablement: An agent compiles relevant materials, drafts personalized pitches, and schedules outreach based on CRM signals.

Benefits:

- Fewer delays and dropped handoffs

- Reduced coordination overhead

- Faster time-to-completion across processes

2. Talent and Organizational Design

Agentic AI changes the nature of work. Humans move from task execution to oversight, guidance, and high-value decision-making.

Implications:

- Upskilling: Employees need to understand how to supervise agents, interpret their outputs, and manage exceptions.

- New roles: Companies may introduce roles such as AI Operations Manager or Agent Governance Lead.

- Team structure: A single person can manage multiple agents, increasing span of control and reducing management layers.

Example:

In customer service, one human supervisor may monitor ten AI support agents, stepping in only when escalation is needed or context is too complex.

3. Customer Experience: Always-On, Personal, and Scalable

Agentic AI enables more responsive and tailored customer engagement without adding headcount.

Use cases:

- Proactive support: An agent detects a failed transaction and contacts the customer with a fix before they reach out.

- Personalized sales: The agent recommends products based on real-time behavior, purchase history, and customer preferences.

- Dynamic retention: If a customer shows signs of churn, the agent can intervene with an incentive or outreach campaign.

Benefits:

- 24/7 availability across channels

- Personalized experiences at scale

- Consistent service quality and faster resolutions

4. Strategic Agility and Competitive Advantage

By offloading repetitive processes and accelerating execution, agentic AI frees up human capacity and reduces operational friction.

How this creates an edge:

- Faster cycle times: Agents can compress planning, coordination, and reporting timelines.

- Lower operating costs: Tasks handled by agents reduce the need for manual labor on routine processes.

- Greater responsiveness: Enterprises can respond to customer needs and market changes in real time.

Example:

A supply chain agent identifies a disruption, reroutes logistics, and notifies partners before a human manager would even see the issue.

5. Risk, Governance, and Accountability

Autonomous agents must be deployed with clear boundaries, oversight, and safeguards. As agents act independently, organizations must ensure their actions remain aligned with business goals and policies.

Best practices:

- Define clear goals and guardrails for each agent

- Use logging and monitoring to track behavior

- Require human sign-off for high-impact actions

- Establish internal governance around agent lifecycle and performance

Example:

An AI financial agent might prepare draft recommendations, but never execute a transaction without human approval.
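A human sign-off requirement can be enforced in code rather than left to convention. In this sketch, the set of high-impact actions and the `submit_action` function are hypothetical; the point is only that a high-impact action without a named approver is held, not executed.

```python
# Hypothetical action types that must never run without a human decision.
HIGH_IMPACT = {"execute_transaction", "issue_refund", "terminate_contract"}

def submit_action(action, payload, approved_by=None):
    """Run low-impact actions directly; hold high-impact ones for sign-off."""
    if action in HIGH_IMPACT and approved_by is None:
        return {"action": action, "status": "pending_approval", "payload": payload}
    return {
        "action": action,
        "status": "executed",
        "payload": payload,
        "approved_by": approved_by,
    }

draft = submit_action("draft_recommendation", {"portfolio": "P-9"})
held = submit_action("execute_transaction", {"amount": 5000})
released = submit_action(
    "execute_transaction", {"amount": 5000}, approved_by="cfo@example.com"
)
```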

Risks, Ethics, and Guardrails of Agentic AI

Agentic AI introduces powerful new capabilities, but with autonomy comes risk. As these systems begin to operate across workflows, make decisions, and interact with customers, it becomes essential to manage how they behave, what boundaries they respect, and how their actions are reviewed.

This section outlines the core risks and actionable safeguards every enterprise should consider when deploying agentic systems.

Alignment Risk: When Goals Are Misinterpreted

An agent that misunderstands its objective can pursue outcomes that are technically correct but strategically harmful.

Example:

An AI agent is tasked with “reducing customer refunds” and starts denying valid claims to meet that target. The result may satisfy the stated target, but at the cost of customer trust.

Best practices:

- Use clear, bounded goals for agents

- Pair objectives with constraints (e.g., reduce refunds without harming satisfaction)

- Train agents with feedback on acceptable vs. unacceptable outcomes
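Pairing an objective with constraints can be expressed as an acceptance check: a policy counts as successful only when the target is met and no constraint is violated. The thresholds and sample numbers below are invented for illustration.

```python
def evaluate_policy(refund_rate, satisfaction, valid_claims_denied,
                    max_refund_rate=0.05, min_satisfaction=4.2):
    """Accept a policy only if the objective is met and no constraint is broken."""
    violations = []
    if valid_claims_denied > 0:
        violations.append("denied valid claims")
    if satisfaction < min_satisfaction:
        violations.append("satisfaction below floor")
    objective_met = refund_rate <= max_refund_rate
    return {
        "accepted": objective_met and not violations,
        "objective_met": objective_met,
        "violations": violations,
    }

# Made-up numbers: the first policy hits the refund target by denying valid claims.
gamed = evaluate_policy(refund_rate=0.02, satisfaction=3.1, valid_claims_denied=40)
healthy = evaluate_policy(refund_rate=0.04, satisfaction=4.5, valid_claims_denied=0)
```

The `gamed` policy meets the refund target yet is rejected, which is the behavior the refund example calls for.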

Oversight Risk: Lack of Visibility

When agents operate independently, leaders must ensure that actions are visible, traceable, and reversible if needed.

Concerns:

- Black-box decisions with no explanation

- Missed escalations for sensitive scenarios

- Inability to audit or roll back agent actions

Safeguards:

- Maintain detailed logs of agent decisions and actions

- Require human approval for high-risk tasks (e.g., financial, legal, HR)

- Integrate dashboards for real-time agent monitoring
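Traceability and reversibility can be sketched with a store that logs every change, including the previous value, so a reviewer can undo a specific entry. The `AuditedStore` class is hypothetical; the undo is itself logged as a new entry rather than erasing history.

```python
class AuditedStore:
    """A key-value store that records every change for review and undo."""

    def __init__(self):
        self.data = {}
        self.log = []  # change records are only ever appended

    def set(self, agent, key, value, reason):
        self.log.append({
            "agent": agent, "key": key,
            "old": self.data.get(key), "new": value, "reason": reason,
        })
        if value is None:
            self.data.pop(key, None)  # None means "remove the key"
        else:
            self.data[key] = value

    def undo(self, index, agent="human_reviewer"):
        """Restore the value that log entry `index` overwrote."""
        entry = self.log[index]
        self.set(agent, entry["key"], entry["old"], f"undo of log entry {index}")

store = AuditedStore()
store.set("billing_agent", "plan:cust-7", "basic", "initial import")
store.set("billing_agent", "plan:cust-7", "premium", "upsell accepted")
store.undo(1)
```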

Ethical Risk: Unintended Harm to Stakeholders

Agents may face situations involving fairness, bias, or ethics. Without the right guidance, they could make decisions that conflict with company values or public expectations.

Use cases that require extra caution:

- Hiring recommendations

- Credit or pricing offers

- Healthcare advice or triage

Mitigation strategies:

- Incorporate ethics guidelines into training and constraints

- Regularly test agents for discriminatory or harmful behavior

- Ensure agents are aligned with your organization’s code of conduct
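One simple form of regular testing is to compare outcome rates across groups on a sample of agent decisions. The sketch below flags a gap when the lowest approval rate falls below a chosen fraction of the highest. The 0.8 threshold and the sample data are assumptions, and a check like this is a starting signal, not a full fairness audit.

```python
from collections import defaultdict

def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}

def disparity_flag(decisions, min_ratio=0.8):
    """Flag when the lowest group rate is below min_ratio of the highest."""
    rates = approval_rates(decisions)
    lowest, highest = min(rates.values()), max(rates.values())
    ratio = lowest / highest if highest else 1.0
    return {"rates": rates, "ratio": ratio, "flagged": ratio < min_ratio}

# Invented sample: group A is approved 8 times in 10, group B 5 times in 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
check = disparity_flag(sample)
```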

Security Risk: Agents as Attack Surfaces

AI agents often interact with internal systems, external APIs, or sensitive data. If compromised or misdirected, they can cause significant damage.

Threats:

- Prompt injection or manipulation by malicious users

- Unauthorized access to data or systems

- Execution of unintended transactions or changes

Defensive measures:

- Authenticate and authorize agent access like human users

- Validate all inputs and outputs from external sources

- Monitor for unusual patterns in behavior or performance
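Treating an agent like a user with limited permissions might look like the following. The permission table, tool names, and credit limit are hypothetical. A check like this does not prevent prompt injection by itself; it limits what a manipulated agent is able to do.

```python
# Hypothetical permission table: which tools each agent identity may call.
PERMISSIONS = {
    "support_agent": {"read_ticket", "send_reply"},
    "billing_agent": {"read_invoice", "issue_credit"},
}
MAX_CREDIT = 100.0  # illustrative cap on a single credit

def authorize(agent_id, tool, args):
    """Return (allowed, reason) for a requested tool call."""
    if tool not in PERMISSIONS.get(agent_id, set()):
        return False, "tool not permitted for this agent"
    if tool == "issue_credit":
        amount = args.get("amount")
        is_number = isinstance(amount, (int, float)) and not isinstance(amount, bool)
        if not is_number:
            return False, "amount must be a number"
        if not 0 < amount <= MAX_CREDIT:
            return False, "amount outside allowed range"
    return True, "ok"

print(authorize("support_agent", "issue_credit", {"amount": 10}))
print(authorize("billing_agent", "issue_credit", {"amount": 5000}))
print(authorize("billing_agent", "issue_credit", {"amount": 25}))
```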

Regulatory and Legal Risk: Non-Compliance

As governments develop rules for AI, enterprises must ensure agentic systems meet evolving standards for transparency, data protection, and accountability.

Key considerations:

- Agents making decisions that affect individuals (e.g., employment, finance, eligibility) may require human oversight

- Privacy laws may limit how long agents can store user data or what they can do with it

- Disclosure requirements may mandate letting users know they are interacting with AI

Compliance steps:

- Review local and regional AI laws before deployment

- Document how each agent is trained, monitored, and controlled

- Include opt-outs or human alternatives where legally required
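Where privacy rules limit how long an agent may keep user data, the agent's memory needs a purge step. This sketch assumes each memory record carries a `stored_at` timestamp. The 30-day window is a placeholder, not a legal requirement; actual retention periods depend on the applicable law.

```python
from datetime import datetime, timedelta, timezone

def purge_expired(records, retention_days, now=None):
    """Return the records still inside the retention window and a purge count."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    kept = [r for r in records if r["stored_at"] >= cutoff]
    return kept, len(records) - len(kept)

# Illustrative data with a fixed clock so the result is reproducible.
now = datetime(2025, 6, 5, tzinfo=timezone.utc)
memory = [
    {"user": "u1", "note": "prefers email", "stored_at": now - timedelta(days=3)},
    {"user": "u2", "note": "asked about refunds", "stored_at": now - timedelta(days=45)},
]
kept, purged = purge_expired(memory, retention_days=30, now=now)
```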

Organizational Risk: Poor Governance or Misalignment

Without clear roles and responsibilities, agents may be launched without proper oversight, leading to inconsistent practices or internal confusion.

Recommendations:

- Establish a cross-functional AI governance group (IT, legal, HR, operations)

- Define who is responsible for each agent’s behavior and updates

- Build processes for reviewing, updating, and retiring agents over time

Final Thoughts

Agentic AI is not just another step in automation. It is a structural shift in how intelligence is applied within organizations. These systems move beyond tools that execute commands to become collaborators that pursue goals, learn from feedback, and operate with real initiative.

Organizations that prepare for this shift now by investing in talent, redesigning workflows, and building strong governance will be the ones that survive. Those that delay may find themselves adapting to a future shaped by others.