July 24, 2025 • 18 min read

What Is AI Orchestration? How It Works & Use Cases

Head of Content Research


AI orchestration coordinates multiple AI models, data pipelines, and infrastructure components so they work as a unified system. Demand for robust orchestration is growing as companies deploy ever more complex AI ecosystems. According to Gartner, 55% of organizations now have an AI board, and 54% have appointed an AI leader responsible for orchestrating AI activities, a sign that formal AI governance and leadership have become essential for operationalizing AI at scale.

This guide explains AI orchestration fundamentals, implementation strategies, available tools, and practical steps for deployment across industries.

What Is AI Orchestration?

AI orchestration is the coordinated management of AI models, data pipelines, and infrastructure so they work together efficiently. Like a conductor directing musicians in an orchestra, orchestration ensures each AI component contributes to a unified outcome rather than performing independently.

Without orchestration, an e-commerce site might run separate systems for recommendations, inventory, and pricing. Each system works well alone but makes decisions without considering the others. With orchestration, these systems share data and coordinate decisions. The recommendation engine suggests products that are in stock, at optimal prices, with accurate delivery estimates.
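The coordination described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `Product` class, the `INVENTORY` and `DELIVERY_DAYS` snapshots, and the 10% pricing rule are hypothetical stand-ins for real recommendation, inventory, pricing, and fulfillment systems, not any vendor's API.

```python
from dataclasses import dataclass

@dataclass
class Product:
    sku: str
    base_price: float
    score: float  # relevance from a hypothetical recommendation model

# Hypothetical snapshots from two other systems the orchestrator consults.
INVENTORY = {"A1": 12, "B2": 0, "C3": 3}       # units in stock per SKU
DELIVERY_DAYS = {"A1": 2, "B2": 5, "C3": 4}    # fulfillment estimate per SKU

def dynamic_price(product: Product, stock: int) -> float:
    # Illustrative pricing rule: 10% off when stock is plentiful.
    factor = 0.9 if stock > 10 else 1.0
    return round(product.base_price * factor, 2)

def orchestrate_recommendations(candidates: list, limit: int = 2) -> list:
    """Combine recommendation, inventory, pricing, and delivery data into one decision."""
    results = []
    for product in sorted(candidates, key=lambda p: p.score, reverse=True):
        stock = INVENTORY.get(product.sku, 0)
        if stock == 0:
            continue  # skip items that cannot ship, however relevant
        results.append({
            "sku": product.sku,
            "price": dynamic_price(product, stock),
            "delivery_days": DELIVERY_DAYS.get(product.sku),
        })
        if len(results) == limit:
            break
    return results

candidates = [Product("B2", 40.0, 0.95), Product("A1", 20.0, 0.90), Product("C3", 55.0, 0.70)]
# B2 scores highest but is out of stock, so A1 and C3 are recommended instead.
print(orchestrate_recommendations(candidates))
```

A real orchestrator would query live services rather than dictionaries, but the design choice is the same: no single system makes the final call on its own.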

AI Orchestration vs. Traditional Automation & ML Orchestration

Not all automation is orchestration. This section explains how AI orchestration differs from traditional workflow automation, classic ML orchestration, and standalone AI agents.

AI Orchestration vs. Workflow Automation

AI orchestration manages entire AI ecosystems with intelligent decision-making capabilities that adapt to changing conditions and context, while workflow automation handles specific, predefined tasks with fixed logic and predetermined sequences.

Workflow automation excels at routine processes: data entry, file transfers, email notifications following predetermined rules. AI orchestration enables dynamic, context-aware responses that consider multiple factors and adapt behavior based on current conditions.

Example: Workflow automation sends confirmation emails when forms are submitted. AI orchestration analyzes form content, determines customer intent, checks inventory availability, generates personalized responses, and escalates to appropriate specialists when needed.
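A minimal sketch of that contrast, assuming a keyword-based `classify_intent` as a stand-in for a real intent model and a hypothetical `STOCK` dictionary in place of an inventory service. The action strings are illustrative labels, not calls to any real API.

```python
STOCK = {"widget": 0, "gadget": 7}  # hypothetical inventory snapshot

def workflow_automation(form: dict) -> list:
    # Fixed rule: the same action for every submission.
    return [f"send_confirmation:{form['email']}"]

def classify_intent(text: str) -> str:
    # Keyword stand-in for an ML or LLM intent classifier (assumption).
    lowered = text.lower()
    if "refund" in lowered or "broken" in lowered:
        return "complaint"
    if "buy" in lowered or "order" in lowered:
        return "purchase"
    return "general"

def orchestrated_flow(form: dict) -> list:
    # Context-aware: the steps depend on what the form actually says.
    actions = []
    intent = classify_intent(form["message"])
    if intent == "complaint":
        actions.append("escalate:support_specialist")
    elif intent == "purchase":
        item = form.get("item", "")
        in_stock = STOCK.get(item, 0) > 0
        actions.append(f"check_inventory:{item}:{'in_stock' if in_stock else 'out_of_stock'}")
        if not in_stock:
            actions.append("escalate:sales_specialist")
    actions.append(f"send_personalized_reply:{form['email']}:{intent}")
    return actions

form = {"email": "a@example.com", "message": "I'd like to order a widget", "item": "widget"}
print(workflow_automation(form))
print(orchestrated_flow(form))
```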

AI Orchestration vs. ML Orchestration

AI orchestration manages business processes that happen to use AI components alongside other systems.

ML orchestration manages the technical aspects of machine learning model development, training, and deployment.

| Aspect | AI Orchestration | ML Orchestration |
|---|---|---|
| Scope | Entire AI ecosystem: LLMs, agents, APIs, databases, external services, business logic | ML model pipelines: training, deployment, monitoring, experimentation |
| Primary Goal | Multi-system intelligent workflows and business process automation | Model lifecycle management and ML pipeline automation |
| Inclusion of Non-ML Systems | Yes: integrates databases, APIs, business applications, human workflows | Limited: focuses primarily on ML-specific components and processes |
| User Profiles | Business analysts, solution architects, system integrators, domain experts | Data scientists, ML engineers, MLOps specialists |
| Typical Tools | LangChain, CrewAI, n8n with AI connectors, business process platforms | MLflow, Kubeflow, Airflow for ML pipelines, experiment tracking tools |
| Example Use Cases | Customer service automation, multi-agent business processes | Model training pipelines, A/B testing frameworks, model deployment |

AI Orchestration vs. AI Agents

AI orchestration coordinates multiple agents and other AI components while ensuring policy compliance, resource optimization, and system-wide coherence. Orchestration manages the environment in which agents operate.

AI agents are autonomous systems that perform specific tasks independently within their area of expertise. They make decisions based on their training and instructions and execute actions without external coordination.

| AI Agents | AI Orchestration |
|---|---|
| Autonomous task execution within defined scope | Coordinates agents plus databases, APIs, business logic |
| Independent decision-making based on training | Manages policies, resource allocation, compliance across systems |
| Examples: Customer service chatbot, code generation assistant | Examples: Multi-agent customer service platform, enterprise AI workflow |
| Focus: Task completion and domain expertise | Focus: System coordination and business process optimization |

Example: Agents are like specialized employees handling assigned responsibilities. Orchestration is like management coordinating employee activities, allocating resources, ensuring compliance, and optimizing overall organizational performance.

Why AI Orchestration Matters

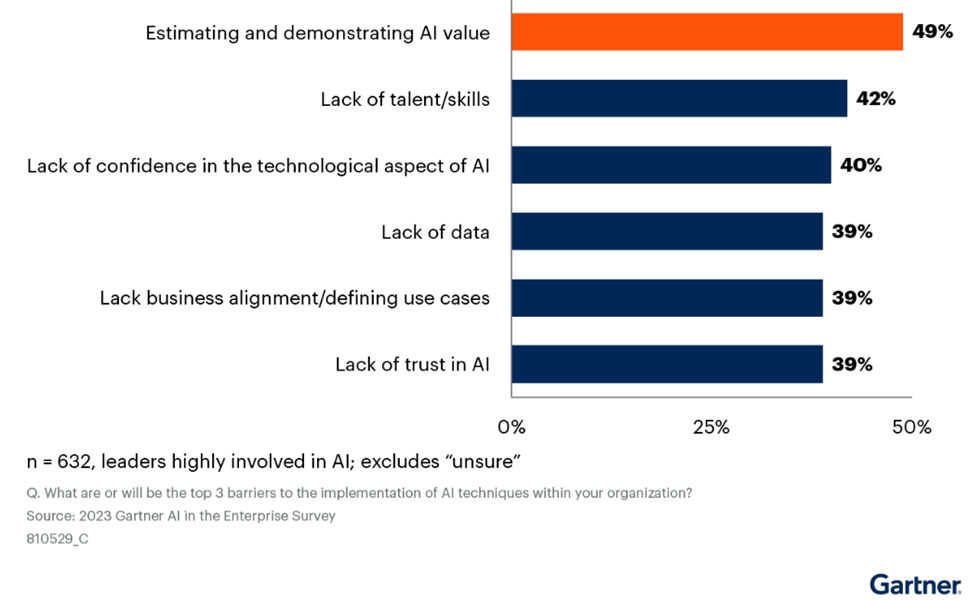

Gartner reports that, on average, only 48% of AI projects make it into production, and it takes 8 months to go from AI prototype to production. The main barriers are demonstrating AI's value and a shortage of talent and skills. Organizations have access to hundreds of AI models and services but struggle to make them work together effectively.

The proliferation of large language models has created new challenges. Companies deploy GPT-4 for content generation, Claude for analysis, and specialized models for specific tasks. Without orchestration, these systems duplicate efforts, create inconsistent outputs, and require manual coordination.

This complexity has also fueled the rise of LLMOps, which extends MLOps practices to deploying, monitoring, and managing language models in production.

Here are some of the business benefits of AI orchestration:

- Operational Efficiency: Orchestrated systems eliminate redundant processing and manual intervention. A customer service AI that accesses inventory, customer history, and product data simultaneously can cut resolution times from hours to minutes.

- Scalability: Orchestration platforms automatically allocate resources based on demand. E-commerce sites scale recommendation engines during peak shopping while reducing background processing, optimizing costs without performance degradation.

- Operational Cost Reduction: By matching resources to real workloads and eliminating the inefficiencies of isolated systems, AI orchestration can reduce infrastructure costs by 30–40%.

- Accelerated Development: Teams focus on business logic rather than integration complexity. Adding a new AI capability to an orchestrated system can take days rather than the months a custom integration requires.

- Enhanced Fraud Detection Accuracy: By orchestrating multiple AI models that integrate transaction data, customer interactions, and behavioral analytics, financial organizations can improve fraud detection accuracy by up to 20% compared to isolated AI systems.

- Collaboration & Visibility: Centralized dashboards show AI component interactions, enabling teams to troubleshoot issues and optimize performance across the entire system.

- Compliance & Governance: Centralized orchestration controls apply security policies, audit trails, and regulatory requirements consistently across all AI systems.

- Adaptability: Orchestrated systems adjust dynamically to changing conditions. If one AI service fails, orchestration can automatically route requests to backup systems, minimizing service interruption.

- Decision Intelligence: Bridges data analysis and actionable outcomes. Instead of generating reports requiring human interpretation, orchestrated systems automatically trigger appropriate business responses.
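The adaptability benefit can be illustrated with a simple failover router. This sketch assumes each AI service is exposed as a Python callable; `primary_model` and `backup_model` are hypothetical stand-ins, and a production router would also add timeouts, health checks, and provider-specific error handling.

```python
from typing import Callable, List, Tuple

class AllProvidersFailed(Exception):
    """Raised when every service in the failover chain has failed."""

def route_with_failover(request: str, providers: List[Tuple[str, Callable[[str], str]]]) -> Tuple[str, str]:
    errors = []
    for name, call in providers:
        try:
            return name, call(request)  # first healthy provider wins
        except Exception as exc:  # real code would catch provider-specific errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))

def primary_model(prompt: str) -> str:
    raise TimeoutError("primary endpoint timed out")  # simulated outage

def backup_model(prompt: str) -> str:
    return f"backup answer to: {prompt}"

used, answer = route_with_failover("Where is my order?", [("primary", primary_model), ("backup", backup_model)])
print(used, "|", answer)
```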

How AI Orchestration Works

AI orchestration works by coordinating agents, models, tools, and data into a single automated system that can plan, adapt, and execute tasks based on real-time input. Instead of scripting each step manually, you define goals, and the orchestration framework decides how to reach them.

In this section, you’ll learn how orchestration systems are structured, what technologies power them, and how they function in real-world workflows.

Core Layers of AI Orchestration

Orchestrated systems typically rely on four functional layers, each with a specific role: controlling logic, managing memory, running models, and ensuring accountability.

1. Execution Layer

The execution layer controls the flow of tasks. It decides which agents to activate, when to trigger tools, and how to respond if something fails. You can use decision trees, planning graphs, or LLMs to generate action chains.

This layer supports:

- Task routing and agent sequencing

- Conditional logic and retry policies

- Parallel task execution and interrupt handling
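The three capabilities above (routing, retry policies, and parallel execution) can be sketched with Python's standard `asyncio` library. `FlakyTool`, the routing table, and the backoff values are hypothetical; real frameworks expose their own retry and routing configuration.

```python
import asyncio

class FlakyTool:
    """Hypothetical tool that fails a fixed number of times, then succeeds."""
    def __init__(self, name: str, failures: int = 0):
        self.name, self.failures, self.calls = name, failures, 0

    async def __call__(self, query: str) -> str:
        self.calls += 1
        await asyncio.sleep(0)  # yield control, as real network I/O would
        if self.calls <= self.failures:
            raise ConnectionError(f"{self.name} unavailable")
        return f"{self.name}:{query}"

async def with_retries(tool, query: str, attempts: int = 3, base_delay: float = 0.01) -> str:
    """Retry policy: exponential backoff on transient errors, then re-raise."""
    for attempt in range(1, attempts + 1):
        try:
            return await tool(query)
        except ConnectionError:
            if attempt == attempts:
                raise
            await asyncio.sleep(base_delay * 2 ** (attempt - 1))

def route(task_type: str) -> list:
    # Conditional routing: which tools each task type needs (illustrative rules).
    routes = {"order_status": ["orders_api", "shipping_api"], "faq": ["knowledge_base"]}
    return routes.get(task_type, ["human_review"])

async def execute(task_type: str, query: str, tools: dict) -> list:
    # Fan out to every routed tool in parallel, each wrapped in the retry policy.
    selected = [tools[name] for name in route(task_type)]
    return await asyncio.gather(*(with_retries(tool, query) for tool in selected))

tools = {name: FlakyTool(name) for name in ("shipping_api", "knowledge_base", "human_review")}
tools["orders_api"] = FlakyTool("orders_api", failures=2)  # succeeds on the third try
results = asyncio.run(execute("order_status", "order-42", tools))
print(results)
```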

2. Memory and Context Layer

This layer stores the information that agents need to reason correctly. It includes short-term memory (like current task state) and long-term memory (like customer history or embeddings).

You often connect this layer to:

- Vector databases (e.g., Pinecone, Weaviate)

- Structured stores (e.g., Redis, Postgres)

- Retrieval-augmented generation (RAG) pipelines
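A toy version of both memory tiers, assuming a bag-of-words `embed` function as a stand-in for a real embedding model and a Python list in place of a vector database such as Pinecone or Weaviate. The retrieval step mirrors the basic RAG pattern: rank stored documents by cosine similarity to the query and prepend the best match to the prompt.

```python
import math
from collections import Counter, deque

def embed(text: str) -> Counter:
    # Toy bag-of-words vector; a real system would call an embedding model.
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class AgentMemory:
    def __init__(self, short_term_size: int = 3):
        self.short_term = deque(maxlen=short_term_size)  # recent turns / current task state
        self.long_term = []  # (document, vector) pairs; a vector DB in production

    def remember_turn(self, turn: str) -> None:
        self.short_term.append(turn)

    def store(self, document: str) -> None:
        self.long_term.append((document, embed(document)))

    def retrieve(self, query: str, k: int = 1) -> list:
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]

    def build_context(self, query: str) -> str:
        # RAG-style prompt: retrieved facts plus recent conversation, then the question.
        facts = "\n".join(self.retrieve(query))
        history = "\n".join(self.short_term)
        return f"Facts:\n{facts}\nRecent turns:\n{history}\nQuestion: {query}"

memory = AgentMemory(short_term_size=2)
memory.store("customer 17 prefers email contact")
memory.store("the premium plan includes priority shipping")
for turn in ["hi", "I have a question", "about shipping"]:
    memory.remember_turn(turn)
print(memory.build_context("does the premium plan include shipping"))
```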

3. Model and Tool Layer

This is where your LLMs, APIs, search tools, and domain-specific models live. The orchestrator selects which tools to call based on the task and policies you define.

It supports:

- Tool selection based on task type or confidence

- LLM orchestration with fallback options

- Rate-limiting and access control
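Tool selection, fallback, and rate limiting can be combined in one dispatch function. This sketch uses a token-bucket limiter, one common rate-limiting technique; the tool names, the 0.8 confidence threshold, and the `FakeClock` used to make the example deterministic are all assumptions for illustration.

```python
import time

class TokenBucket:
    """Token-bucket rate limiter: refills `rate` tokens per second, up to `capacity`."""
    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

def select_tool(task_type: str, confidence: float) -> str:
    # Illustrative policy: search tasks use search; low-confidence tasks get a larger model.
    if task_type == "search":
        return "web_search"
    return "small_llm" if confidence >= 0.8 else "large_llm"

def dispatch(task_type: str, confidence: float, limiters: dict, fallback: str = "small_llm") -> str:
    tool = select_tool(task_type, confidence)
    if limiters[tool].allow():
        return tool
    # Preferred tool is rate-limited: try the fallback, otherwise defer the task.
    if tool != fallback and limiters[fallback].allow():
        return fallback
    return "deferred"

class FakeClock:
    """Manually advanced clock so the example is deterministic."""
    def __init__(self):
        self.now = 0.0

    def __call__(self) -> float:
        return self.now

clock = FakeClock()
limiters = {name: TokenBucket(rate=1.0, capacity=1, clock=clock)
            for name in ("small_llm", "large_llm", "web_search")}
decisions = [dispatch("qa", 0.5, limiters) for _ in range(3)]
clock.now = 1.0  # one second later, buckets have refilled
decisions.append(dispatch("qa", 0.5, limiters))
print(decisions)
```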

4. Audit and Monitoring Layer

This layer logs every action the system takes. You use it to debug workflows, enforce compliance, and measure system performance.

You’ll log:

- Prompt and response data

- Model usage and latency

- Agent paths and error points
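One lightweight way to capture those fields is to wrap each step in a context manager that emits a JSON log line. The `audited_step` helper and the in-memory `AUDIT_LOG` list below are hypothetical; in practice the records would go to a logging or observability backend, and the shared `trace_id` is what lets you reconstruct an agent's path.

```python
import json
import time
import uuid
from contextlib import contextmanager

AUDIT_LOG = []  # stand-in for a real sink: log file, SIEM, or observability backend

@contextmanager
def audited_step(trace_id: str, agent: str, model: str, prompt: str):
    """Log prompt, response, latency, and errors for one step as a JSON line."""
    record = {"trace_id": trace_id, "agent": agent, "model": model, "prompt": prompt}
    start = time.perf_counter()
    try:
        yield record  # the step itself fills in record["response"]
        record["status"] = "ok"
    except Exception as exc:
        record["status"] = "error"
        record["error"] = repr(exc)
        raise
    finally:
        record["latency_ms"] = round((time.perf_counter() - start) * 1000, 2)
        AUDIT_LOG.append(json.dumps(record))

trace_id = str(uuid.uuid4())  # one ID ties together the agent path for a request
with audited_step(trace_id, "support_agent", "draft-model", "Summarize ticket 881") as rec:
    rec["response"] = "Customer reports a late delivery."
try:
    with audited_step(trace_id, "validator", "pii-filter", "Check draft") as rec:
        raise ValueError("PII detected")
except ValueError:
    pass  # the orchestrator would reroute or escalate here

entries = [json.loads(line) for line in AUDIT_LOG]
for entry in entries:
    print(entry["agent"], entry["status"], entry["latency_ms"])
```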

Supporting Technologies

These technologies make orchestration systems scalable, secure, and reliable.

APIs and Messaging

Orchestrators use APIs to connect to tools, models, and services. REST and gRPC are common. For complex workflows, event-driven messaging (like Kafka or NATS) enables asynchronous steps and real-time communication.
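Event-driven messaging can be illustrated in-process with `asyncio.Queue` as a stand-in for a Kafka topic or NATS subject. This is only a sketch of the pattern (producers publish events, consumers process them independently); real brokers add durability, partitioning, and delivery guarantees that an in-memory queue does not provide. The event names are hypothetical.

```python
import asyncio

async def intake(bus: asyncio.Queue, tickets: list) -> None:
    # Producer: publishes events instead of calling downstream services directly.
    for ticket in tickets:
        await bus.put({"type": "ticket.created", "text": ticket})
    await bus.put(None)  # sentinel: no more events

async def classifier(bus: asyncio.Queue, out: asyncio.Queue) -> None:
    # Consumer: handles events at its own pace and publishes new ones.
    while (event := await bus.get()) is not None:
        label = "billing" if "invoice" in event["text"].lower() else "general"
        await out.put({"type": "ticket.classified", "label": label, "text": event["text"]})
    await out.put(None)

async def collect(out: asyncio.Queue, results: list) -> None:
    while (event := await out.get()) is not None:
        results.append(event)

async def main(tickets: list) -> list:
    bus, out, results = asyncio.Queue(), asyncio.Queue(), []
    await asyncio.gather(intake(bus, tickets), classifier(bus, out), collect(out, results))
    return results

events = asyncio.run(main(["Invoice total is wrong", "App crashes on login"]))
for event in events:
    print(event["label"], "-", event["text"])
```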

Cloud and Containerization

You typically deploy agents and models in containers. Kubernetes handles scaling, load balancing, and fault recovery. Serverless setups are common for lightweight orchestration logic.

Frameworks Built for Orchestration

Modern platforms help you manage the complexity:

- LangGraph creates agent workflows using a graph-based structure

- CrewAI coordinates multi-agent setups with role assignments

- Flyte and Prefect manage Python-native workflows with retries, scheduling, and logging

- MLflow and Kubeflow handle ML experiment tracking and pipeline deployment

Real Example: AI-Powered Customer Support

Here’s how orchestration runs behind the scenes:

- A user sends a support request.

- The execution layer routes the task to an intent classifier.

- The memory layer pulls past conversation history and product details.

- The model layer calls an LLM to generate a draft response.

- A validator tool checks the tone and filters any sensitive data.

- The audit layer logs the full flow for quality review and training.

These aren't six isolated steps. They form one orchestrated workflow.
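The six steps can be wired together as one function. Every component here is a hypothetical stub (a keyword classifier, a hard-coded context lookup, a template in place of the LLM), and the validator only redacts email addresses and US-style phone numbers; the tone check is omitted for brevity.

```python
import re

def classify_intent(text: str) -> str:
    # Stand-in for an intent classifier.
    return "refund" if "refund" in text.lower() else "general"

def load_context(customer_id: str) -> dict:
    # Memory layer stand-in: a CRM or vector-store lookup in practice.
    return {"history": ["Order #1001 delivered late"], "product": "Standard plan"}

def draft_reply(intent: str, context: dict) -> str:
    # Model layer stand-in: an LLM call in practice, a template here.
    return (f"Sorry about '{context['history'][0]}'. For your {intent} request, "
            "contact me at agent@example.com or 555-123-4567.")

def validate(reply: str) -> str:
    # Validator: redact email addresses and US-style phone numbers before sending.
    reply = re.sub(r"[\w.+-]+@[\w-]+\.[\w.]+", "[email]", reply)
    return re.sub(r"\b\d{3}-\d{3}-\d{4}\b", "[phone]", reply)

def handle_request(customer_id: str, text: str, audit: list) -> str:
    intent = classify_intent(text)                  # execution layer routes to classifier
    audit.append(("classified", intent))
    context = load_context(customer_id)             # memory layer
    audit.append(("context_loaded", customer_id))
    reply = validate(draft_reply(intent, context))  # model layer, then validator
    audit.append(("validated", reply))              # audit layer
    return reply

audit_trail = []
print(handle_request("cust-17", "I want a refund for my order", audit_trail))
```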

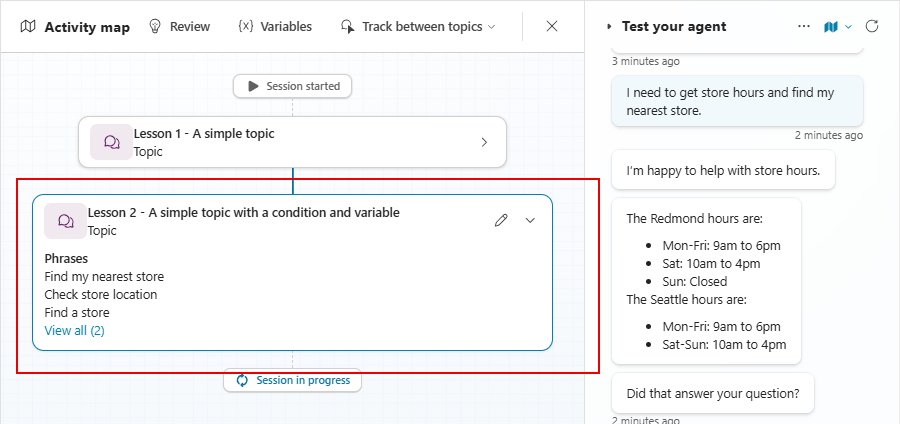

As Microsoft explains in its Copilot orchestration guide, generative orchestration doesn’t follow fixed steps. It selects agents and tools dynamically based on what the user wants and what the system knows.

Use Cases and Industry Applications

AI orchestration is only useful if it solves real problems. In this section, you’ll see how businesses apply orchestration to real-world workflows. These include autonomous support agents, research automation, and decision systems in finance, healthcare, and law. Each use case shows how coordinated models and tools outperform isolated AI tasks.

Customer Support & Chatbots

Modern customer support orchestrates large language models for language understanding, knowledge bases for accurate answers, CRM systems for customer context, and sentiment analysis to assess tone and urgency.

When customers reach out, orchestration analyzes the inquiry, retrieves relevant context, selects the optimal response approach, and escalates to human agents when needed. According to a Forrester study commissioned by Avaya, 52% of decision-makers prioritize AI to increase support efficiency, and 45% plan to implement orchestration tools within a year to improve customer journey optimization and real-time engagement.
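The escalation decision is where orchestration combines outputs from several systems. The sketch below assumes an intent classifier that returns a confidence in [0, 1], a sentiment model that returns a score in [-1, 1], a knowledge-base hit flag, and a CRM `vip` flag; all thresholds are illustrative and would need tuning against real data.

```python
from dataclasses import dataclass

@dataclass
class Signals:
    intent: str
    intent_confidence: float  # from an intent classifier (assumed 0..1)
    sentiment: float          # from a sentiment model (assumed -1..1)
    kb_hit: bool              # did the knowledge base return a relevant answer?
    vip: bool                 # customer tier from the CRM

def choose_path(s: Signals) -> str:
    """Illustrative escalation policy combining several model and system outputs."""
    if s.intent == "legal" or (s.sentiment < -0.6 and s.vip):
        return "human_agent_priority"
    if s.intent_confidence < 0.5 or not s.kb_hit:
        return "human_agent"
    if s.sentiment < -0.3:
        return "llm_reply_with_apology_and_followup"
    return "llm_reply"

print(choose_path(Signals("billing", 0.9, 0.2, True, False)))
print(choose_path(Signals("billing", 0.9, -0.8, True, True)))
```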

E-Commerce & Retail Personalization

Retailers orchestrate recommendation engines using purchase and browsing data, dynamic pricing tools that respond to demand shifts, and fulfillment systems that adjust based on real-time inventory.

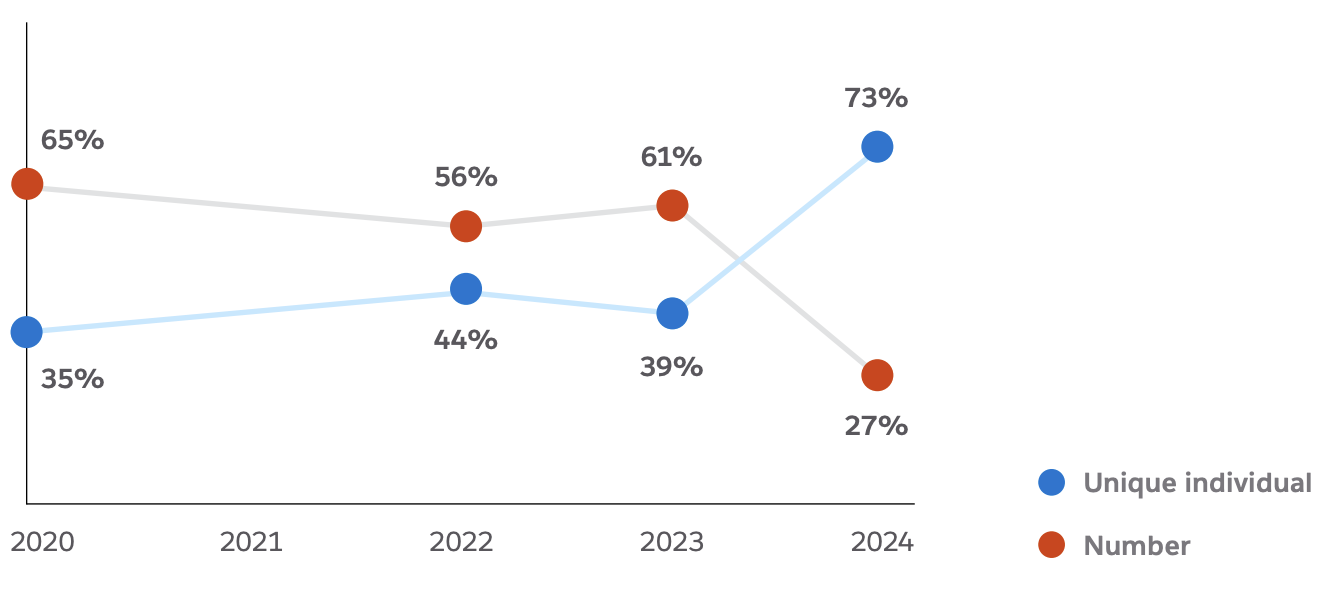

This coordination helps deliver personalized shopping experiences by showing the right products, at the right price, with accurate delivery estimates. According to Salesforce, the share of customers who feel brands treat them as unique individuals jumped from 39% to 73% in the past year. However, only 49% believe their data is used in a way that benefits them, highlighting the importance of trust and transparency in AI orchestration.

Healthcare & Diagnostics

Healthcare orchestration integrates imaging analysis (X-rays, MRIs, CT scans), natural language processing for clinical notes, and predictive models for assessing risk and treatment outcomes.

This layered approach enhances clinician decision-making by catching early-stage disease patterns, recommending personalized treatments, and flagging risks such as drug interactions. Deloitte highlights how AI-powered imaging and electronic health records can improve diagnostic accuracy and speed. When integrated effectively, AI augments clinicians rather than replacing them. The result can be earlier intervention, better patient outcomes, and improved continuity of care.

Finance & Fraud Detection

Financial institutions orchestrate transaction pattern analysis, geolocation monitoring for unusual activity, social network analysis for coordinated fraud detection, and real-time risk scoring combining all insights.

Orchestrated fraud detection can achieve high accuracy while minimizing false positives that block legitimate transactions. Coordinating several detection approaches catches sophisticated fraud attempts that evade any single model.
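A minimal sketch of combining detectors into one real-time risk score, using a logistic function over weighted signals. The three detectors, the weights, the bias, and the 900 km/h travel threshold are illustrative assumptions, not values from any production fraud system.

```python
import math

def amount_anomaly(amount: float, history: list) -> float:
    """How unusual this amount is versus past amounts, scaled to [0, 1]."""
    mean = sum(history) / len(history)
    std = math.sqrt(sum((x - mean) ** 2 for x in history) / len(history)) or 1.0
    z = (amount - mean) / std
    return min(max(z / 4, 0.0), 1.0)  # a z-score of 4 or more saturates at 1.0

def impossible_travel(km_since_last: float, hours_since_last: float, max_kmh: float = 900.0) -> float:
    # 1.0 if reaching this location from the last one would need faster-than-airliner travel.
    speed = km_since_last / max(hours_since_last, 1e-6)
    return 1.0 if speed > max_kmh else 0.0

def network_risk(linked_flagged_accounts: int) -> float:
    # Links to already-flagged accounts, saturating at a hypothetical threshold of 3.
    return min(linked_flagged_accounts / 3, 1.0)

def risk_score(signals: dict, weights: dict, bias: float = -4.0) -> float:
    # Logistic combination of the individual detector outputs into one score in (0, 1).
    z = bias + sum(weights[name] * value for name, value in signals.items())
    return 1 / (1 + math.exp(-z))

weights = {"amount": 3.0, "travel": 4.0, "network": 3.0}  # illustrative, not fitted
history = [40.0, 55.0, 38.0, 60.0, 47.0]
routine = {"amount": amount_anomaly(52.0, history), "travel": impossible_travel(5, 2), "network": network_risk(0)}
big_but_legit = {"amount": amount_anomaly(900.0, history), "travel": 0.0, "network": 0.0}
coordinated = {"amount": amount_anomaly(900.0, history), "travel": impossible_travel(6000, 1), "network": network_risk(2)}
for name, s in [("routine", routine), ("big_but_legit", big_but_legit), ("coordinated", coordinated)]:
    print(name, round(risk_score(s, weights), 3))
```

With these weights, a large purchase on its own scores about 0.27, well below a blocking threshold such as 0.9, while the same amount combined with impossible travel and links to flagged accounts scores above 0.99. No single anomalous signal is enough to block a legitimate customer.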

Supply Chain & Manufacturing

Manufacturers orchestrate predictive maintenance models with production planning and quality control systems. Edge sensors collect equipment data, cloud analytics predict service needs, production tools adjust schedules, and quality systems monitor for deviations.

According to Freshworks, AI trendsetters in manufacturing achieve 16-second first response times and resolve issues in just 2 minutes in conversational support. In ticketing, they reach 11-minute first responses and 47-minute resolution times, far outperforming their peers. Although these are customer-support metrics, they show how coordinated AI can improve responsiveness, maintain quality, and reduce operational friction in manufacturing organizations.

Additional Sectors

- Energy companies orchestrate smart grid systems that balance supply and demand in real time, integrating weather forecasting, consumption prediction, and renewable energy optimization.

- Transportation orchestrates route optimization, vehicle maintenance, and traffic management to improve logistics efficiency and safety.

- Education platforms coordinate personalized learning algorithms, progress tracking, and content recommendations to adapt experiences to individual students.

- Legal services orchestrate document analysis, case research, and compliance monitoring to improve research efficiency and ensure regulatory compliance.

Tools and Platforms for AI Orchestration

Choosing the right orchestration platform depends on your goals, team structure, and tech stack. Below are popular tools organized by core functions. Each category supports a specific use case, whether you're building multi-agent LLM systems, managing ML pipelines, or integrating AI into business workflows.

Agent-Based & LLM-Centric Frameworks

- LangChain provides a comprehensive framework for building LLM applications, including chain orchestration, memory management, and tool integration. It is particularly effective for document analysis and conversational AI.

- AutoGen, Microsoft's open-source framework, enables multi-agent conversations in which AI agents with different roles collaborate to solve complex problems. Effective for scenarios requiring multiple perspectives or specialized expertise.

- CrewAI coordinates teams of AI agents with defined roles and responsibilities. Excels in project management and collaborative problem-solving scenarios.

- Haystack specializes in search and question-answering applications, orchestrating document retrieval, processing, and response generation.

- LangGraph models LLM applications as stateful graphs, which simplifies designing and debugging complex agent interactions; LangGraph Studio adds a visual interface for inspecting those graphs.

- LlamaIndex excels at orchestrating data retrieval and analysis workflows, particularly for enterprise knowledge management.


- Open Interpreter lets language models run code (such as Python and shell commands) locally through a natural-language chat interface.

- Orby AI focuses on business process automation with AI agents that learn and replicate human workflows.

- SuperAGI provides agent orchestration with planning, reasoning, and tool-use capabilities.

- Botpress offers conversational AI orchestration with visual flow design and integration capabilities.

Workflow & Pipeline Orchestration for Data Scientists

- Apache Airflow provides directed acyclic graph (DAG) management for complex data workflows. Widely adopted for ETL processes and ML pipeline orchestration.

- Dagster offers asset-based orchestration with strong data lineage tracking and testing capabilities. Particularly effective for data-intensive applications.

- Flyte provides Kubernetes-native workflow orchestration with reproducibility and version control features for ML workloads.

- Kedro offers opinionated ML project structure with pipeline orchestration capabilities.

- Kubeflow delivers comprehensive ML orchestration on Kubernetes, including pipeline management, model serving, and experiment tracking.

- MLflow focuses on ML lifecycle management: experiment tracking, model packaging, and deployment orchestration.

- Metaflow provides human-friendly ML orchestration with strong data science workflow support.

- Ray Serve provides scalable model serving and orchestration for distributed AI applications.

- SynapseML enables large-scale machine learning on Apache Spark with orchestration capabilities.

- Azure Machine Learning provides cloud-native ML orchestration with Azure integration.

- ClearML offers experiment management and orchestration for ML workflows.

- Comet.ml provides ML experiment tracking and orchestration capabilities.

- TFX (TensorFlow Extended) offers end-to-end ML pipeline orchestration for TensorFlow models.

Infrastructure & Container Orchestration

- Kubernetes serves as the foundation for most modern AI orchestration platforms. Provides container orchestration, service discovery, and resource management essential for scalable AI deployments.

- Apache NiFi specializes in data flow management with visual design tools for complex data processing pipelines.

- Docker enables containerization of AI models and dependencies for consistent deployment across environments.

Low-Code & Business-Oriented Platforms

- n8n provides workflow automation with AI integration capabilities. Offers visual workflow design and pre-built connectors for business applications.

- SmythOS enables no-code AI agent design with drag-and-drop interfaces for creating orchestrated workflows.

- Zapier offers business workflow automation with growing AI integration capabilities.

- Microsoft Power Automate provides low-code workflow orchestration with AI services integration.

The Limitations of AI Orchestration

AI orchestration introduces powerful capabilities, but it also comes with real limitations. As systems grow more complex, so do the technical, security, and organizational challenges.

This section breaks down the key obstacles you’re likely to face when implementing or scaling AI orchestration.

Technical Complexities

- Integration & Interoperability Challenges arise from diverse data formats, incompatible standards, and proprietary APIs. Legacy systems often lack modern integration capabilities, requiring custom middleware or complete replacement.

- Scalability Issues emerge as orchestrated systems grow. Resource bottlenecks occur when multiple AI components compete for computing resources. Network latency between distributed components degrades performance. Database locks and synchronization issues create system-wide slowdowns.

- Model Versioning and Lifecycle Management become far more complex in orchestrated environments. When multiple systems depend on specific model versions, updates require coordinated changes across the entire ecosystem. Rollback procedures must account for dependencies and data consistency.

- Data Quality and Integration Problems multiply in orchestrated systems. Inconsistent data formats between systems cause processing errors. Data synchronization delays lead to components operating on outdated information. Data governance becomes critical when multiple AI systems access and modify shared datasets.
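Dependency tracking is one way to make model upgrades less risky. This sketch assumes semantic versioning, where consumers pin a major version and a major bump signals a breaking change; the `Pin` records and model names are hypothetical, and a real setup would read them from a model registry.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pin:
    consumer: str
    model: str
    major: int  # the consumer accepts any version with this major number

def parse(version: str) -> tuple:
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch

def impacted_consumers(model: str, new_version: str, pins: list) -> list:
    """Consumers whose pinned major version the proposed release would break."""
    new_major = parse(new_version)[0]
    return sorted(p.consumer for p in pins if p.model == model and p.major != new_major)

def plan_rollout(model: str, current: str, new_version: str, pins: list) -> dict:
    blocked = impacted_consumers(model, new_version, pins)
    return {
        "model": model,
        "upgrade": f"{current} -> {new_version}",
        "safe": not blocked,
        "must_update_first": blocked,
        "rollback_to": current,  # keep the previous version deployable
    }

pins = [Pin("fraud_scorer", "embedder", 2), Pin("search_api", "embedder", 2), Pin("chat_router", "intent", 1)]
print(plan_rollout("embedder", "2.3.1", "2.4.0", pins))
print(plan_rollout("embedder", "2.3.1", "3.0.0", pins))
```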

Security, Privacy & Compliance

- Risk of Data Breaches increases with system complexity. Each integration point represents a potential attack vector. Orchestrated systems processing sensitive data across multiple components expand the attack surface significantly.

- Model Misuse and Privacy Violations can occur when personal data flows between AI components without proper consent or anonymization. Cross-border data transfers in cloud-based orchestration raise additional regulatory concerns.

- Robust Encryption, Access Controls & Audit Trails are mandatory and add implementation overhead: orchestrated systems need encryption for data in transit and at rest, role-based access controls, and detailed audit trails for all system interactions.

- Ensuring Fairness and Transparency becomes challenging when multiple AI models contribute to decisions. Understanding how orchestrated systems reach conclusions requires sophisticated explainability tools and comprehensive documentation.

Lack of Standardization & Governance

- Absence of Common Frameworks makes integrating tools from different vendors difficult. Each platform uses proprietary configuration formats, APIs, and deployment mechanisms.

- Difficulty Coordinating Multiple Vendors arises from different update schedules, service level agreements, and support models that complicate system management.

- Emerging Initiatives like OpenAPI specifications for AI services and standardized model exchange formats show promise but haven't achieved widespread adoption.

Human & Organizational Challenges

- Skill Gaps in AI, software engineering, and change management represent significant barriers. Organizations need staff with expertise across multiple disciplines and the ability to coordinate teams across departments.

- Cross-Functional Collaboration requirements increase dramatically. Data scientists, software engineers, DevOps specialists, and domain experts must work closely together. Communication barriers and conflicting priorities can derail orchestration projects.

- Ethical Considerations become more complex in orchestrated systems. Human oversight requirements must be built into workflows. Bias detection and mitigation must account for interactions between different AI components.

Implementation Strategies and Best Practices

Building a successful AI orchestration system requires more than just choosing the right tools. It demands a structured implementation plan aligned with business goals, technical capabilities, and cross-functional collaboration. Use the following strategies to guide your rollout.

Start with Business Objectives and Decision Intelligence

Start by defining clear business goals and measurable outcomes. Focus on decisions you want the system to support. For example, if you're orchestrating customer support, set metrics like resolution time, escalation rate, and satisfaction score.

Involve stakeholders from the start. Marketing, operations, and engineering teams bring unique context that helps align technical design with real-world needs. Early alignment avoids scope creep and mismatched expectations.

Build around decision intelligence. Design your workflows to move from insight to action automatically, reducing manual handoffs.
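Writing the metrics down as code before the pilot starts makes the baseline unambiguous. This sketch computes median resolution time, escalation rate, and CSAT from hypothetical ticket records; defining CSAT as the share of 4 and 5 ratings on a 1-5 scale is an assumption, so substitute your own definitions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

@dataclass
class Ticket:
    opened: datetime
    resolved: datetime
    escalated: bool
    csat: Optional[int]  # 1-5 survey score, None if the customer skipped the survey

def support_kpis(tickets: list) -> dict:
    """Baseline metrics to measure before and after orchestration."""
    minutes = [(t.resolved - t.opened).total_seconds() / 60 for t in tickets]
    rated = [t.csat for t in tickets if t.csat is not None]
    return {
        "median_resolution_min": median(minutes),
        "escalation_rate": sum(t.escalated for t in tickets) / len(tickets),
        # CSAT as the share of 4-5 ratings (a common convention, assumed here)
        "csat_pct": 100 * sum(score >= 4 for score in rated) / len(rated) if rated else float("nan"),
    }

t0 = datetime(2025, 7, 1, 9, 0)
tickets = [
    Ticket(t0, t0 + timedelta(minutes=12), False, 5),
    Ticket(t0, t0 + timedelta(minutes=30), True, 3),
    Ticket(t0, t0 + timedelta(minutes=8), False, None),
    Ticket(t0, t0 + timedelta(minutes=45), True, 4),
]
print(support_kpis(tickets))
```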

Assess Data and Infrastructure Readiness

Before selecting a platform, assess your data quality and accessibility. Poor data breaks orchestration. Identify what needs cleaning, standardization, or syncing in real time.

Inventory your existing AI models, APIs, and infrastructure. Understand dependencies, data formats, and performance constraints. This gives you a clear picture of integration challenges and resource needs.

Spot gaps that could block scalability. Outdated databases, low network bandwidth, or limited compute will reduce orchestration effectiveness.

Choose the Right Platform and Tools

Select a platform that fits your current tech stack. Compatibility matters more than feature count. A tool that integrates cleanly will save time and reduce errors.

Evaluate scalability. A platform that works for a pilot may not handle enterprise workloads. Look ahead 2 to 3 years.

Check for built-in security and compliance features. Platforms should support encryption, access controls, and audit logging.

Consider vendor reliability and community support. Open-source tools with active communities often outlast closed systems from unstable vendors.

Design Modular, Scalable Architecture

Build with modularity in mind. Use microservices or event-driven architectures so components can scale independently and fail gracefully.

Adopt an API-first approach. Every service should expose well-documented APIs to simplify integration and future expansion.

Use containerization with Docker and orchestrate deployment using Kubernetes. This enables consistent performance across environments and makes it easier to manage updates.

Run Pilot Projects Before Scaling

Start small. Pick use cases with clear business value, like automating onboarding or fraud detection. Limit the scope to 2 or 3 integrated systems to reduce risk.

Select projects with visible outcomes. Quick wins build confidence and unlock buy-in for larger investments.

Use what you learn to refine your orchestration design. Don’t scale until your architecture and processes have been tested under pressure.

Expand gradually. Add components to existing workflows rather than launching multiple disconnected orchestration initiatives.

Build Cross-Functional Teams and Train Continuously

Orchestration requires collaboration across data science, engineering, operations, and business units. Get all stakeholders involved in the design and feedback cycles.

Train your team on orchestration tools and evolving AI practices. Tech shifts quickly, and your team needs to stay ahead.

Assign clear responsibilities. Define who owns model updates, monitors performance, handles outages, and enforces compliance.

Monitor, Optimize, and Govern

- Set up system monitoring and logging from day one: Track both technical metrics (like latency, error rates, and usage) and business KPIs (like ROI, satisfaction, and accuracy).

- Retrain models and tune infrastructure regularly: Use performance data to adjust routing, allocate resources, and keep outputs aligned with goals.

- Establish governance protocols: Create approval processes, access controls, and audit mechanisms to manage risk and ensure accountability.

- Maintain strong documentation: As systems grow, clear records of workflows, dependencies, and escalation paths are critical for troubleshooting and handoffs.
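Day-one monitoring can start as simply as a sliding window over recent requests. This sketch tracks p95 latency (nearest-rank method) and error rate and flags breaches of illustrative thresholds (800 ms and 2%); in production these numbers would typically come from an observability stack rather than an in-process object.

```python
import math
from collections import deque

class WindowMonitor:
    """Tracks latency and errors over the last `size` requests and flags SLO breaches."""
    def __init__(self, size: int = 100, p95_ms: float = 800.0, max_error_rate: float = 0.02):
        self.samples = deque(maxlen=size)  # (latency_ms, ok) pairs
        self.p95_ms, self.max_error_rate = p95_ms, max_error_rate

    def record(self, latency_ms: float, ok: bool) -> None:
        self.samples.append((latency_ms, ok))

    def p95(self) -> float:
        # Nearest-rank percentile: smallest value covering 95% of samples (needs >= 1 sample).
        latencies = sorted(lat for lat, _ in self.samples)
        rank = math.ceil(95 * len(latencies) / 100)
        return latencies[rank - 1]

    def error_rate(self) -> float:
        return sum(not ok for _, ok in self.samples) / len(self.samples)

    def alerts(self) -> list:
        found = []
        if self.p95() > self.p95_ms:
            found.append(f"p95 latency {self.p95():.0f} ms > {self.p95_ms:.0f} ms")
        if self.error_rate() > self.max_error_rate:
            found.append(f"error rate {self.error_rate():.1%} > {self.max_error_rate:.1%}")
        return found

monitor = WindowMonitor(size=20)
for i in range(20):
    monitor.record(latency_ms=200 + 10 * i, ok=(i % 10 != 0))  # 2 of 20 requests fail
print(monitor.p95(), monitor.error_rate(), monitor.alerts())
```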

FAQs

How do I get started with AI orchestration?

Begin with clear business objectives and a small pilot project integrating 2-3 AI systems. Assess current data quality and infrastructure readiness. Choose orchestration tools that integrate well with existing technology stacks. Start simple, measure results, and scale gradually based on lessons learned from initial implementations. Focus on delivering measurable business value quickly to build organizational support.

Is AI orchestration only useful when you run multiple models?

No. While orchestration is powerful for managing multiple models, it also adds value when coordinating a single model with other components like APIs, databases, or human workflows. Even basic setups benefit from orchestration’s monitoring, retry logic, and policy enforcement.

Can AI orchestration support real-time applications?

Yes, with the right architecture. Many platforms support event-driven execution and real-time inference. For latency-sensitive use cases like fraud detection or autonomous systems, orchestration frameworks must be optimized for low-latency routing and failover.

How do you monitor and debug an orchestrated AI system?

Use observability tools that log every agent action, model output, and system decision. Many orchestration frameworks integrate with logging stacks and expose dashboards for traceability. Always track technical metrics alongside business outcomes.